Teams that run models on Fireworks AI, AWS Bedrock, Google Vertex AI, or Databricks can now connect them directly to SuperAnnotate through Agent Hub. The update lets you run your existing models inside annotation and evaluation workflows without switching tools or building custom bridges.

From pre-labeling to quality assurance and evaluation, using models to streamline human review can improve quality and reduce time-to-delivery. With both humans and models in the loop, teams can produce high-quality data at scale and bring AI products to market faster.

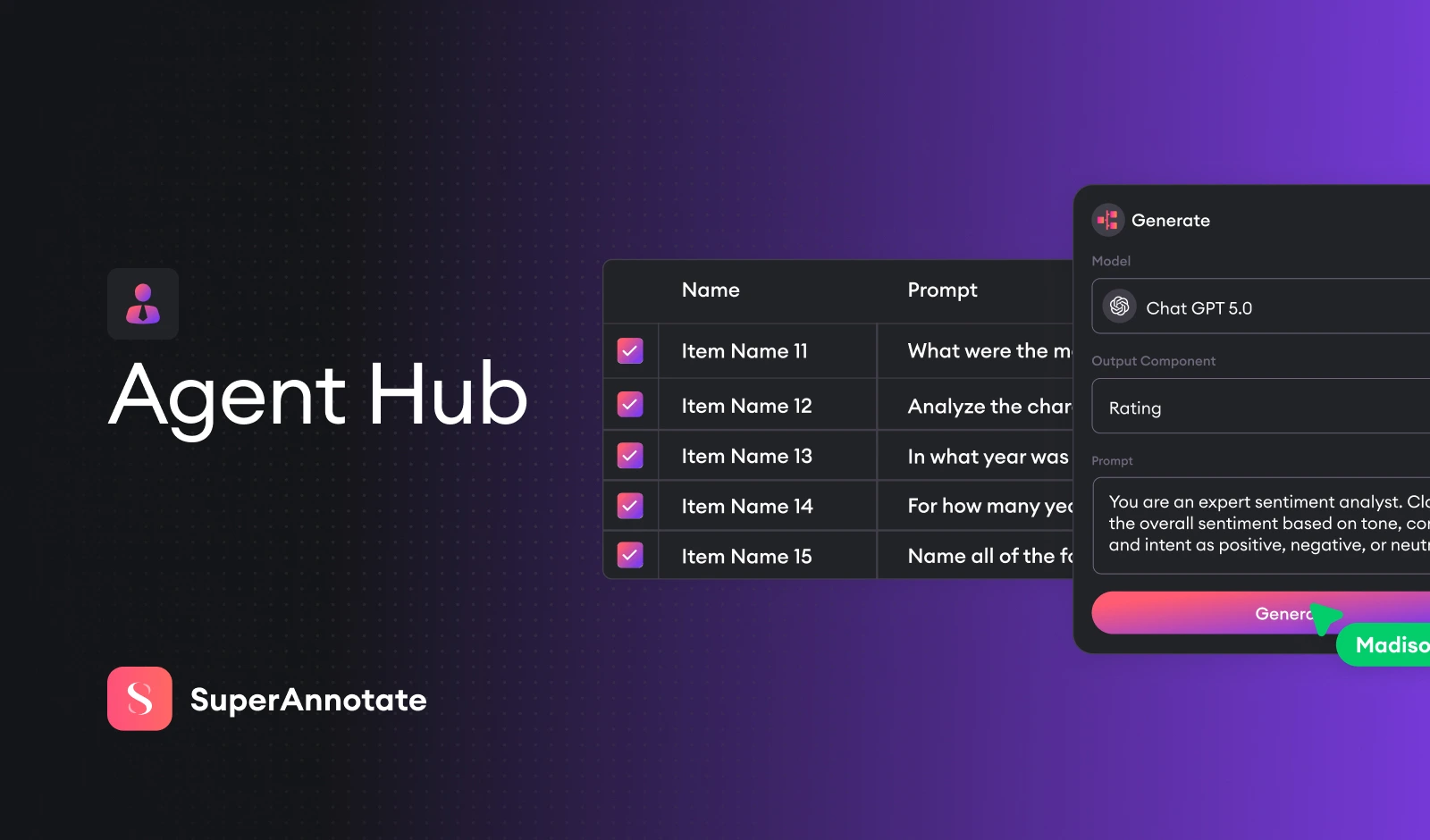

Connect Your Models for Faster Labeling with Agent Hub

Human expertise is critical for building high-quality AI systems. Yet many teams spend part of their valuable review time on simple cases that could be automated. Agent Hub bridges this gap by connecting directly with leading enterprise AI platforms that host and serve models, enabling partial or full automation of many simpler labeling tasks while ensuring expert reviewers focus on the trickiest edge cases.
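To make the idea concrete, here is a minimal sketch of one common way to split work between a model and human reviewers: accept high-confidence model predictions as pre-labels and send everything else to experts. This is an illustration only, not Agent Hub's API. The `Prediction` class, the `route` function, and the 0.9 threshold are all hypothetical, and it assumes your hosted model returns a confidence score with each prediction.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """A hypothetical model output for one item (not a SuperAnnotate type)."""
    item_id: str
    label: str
    confidence: float  # assumed model-reported score in [0, 1]


def route(predictions, threshold=0.9):
    """Split predictions into auto-accepted pre-labels and items for expert review.

    The threshold is an assumed, tunable value; pick it from your own QA data.
    """
    auto_accepted, needs_review = [], []
    for p in predictions:
        if p.confidence >= threshold:
            auto_accepted.append(p)   # simple case: keep the model's label
        else:
            needs_review.append(p)    # edge case: hand off to a human expert
    return auto_accepted, needs_review


# Example predictions, made up for illustration.
preds = [
    Prediction("img-001", "cat", 0.98),
    Prediction("img-002", "dog", 0.55),
    Prediction("img-003", "cat", 0.90),
]

auto, review = route(preds)
print("auto-accepted:", [p.item_id for p in auto])
print("needs review:", [p.item_id for p in review])
```

Raising the threshold sends more items to reviewers and lowers the risk of accepting a wrong label; lowering it automates more at the cost of that safety margin.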

Every enterprise has its own approach to model hosting and deployment. Whether your models run on Fireworks AI, AWS Bedrock, Google Vertex AI, or Databricks, Agent Hub connects to them all, so you can keep using the platforms you rely on today.

With models in the loop, Agent Hub brings together automation and human review to create scalable, high-quality data pipelines. This hybrid approach helps enterprises:

- Accelerate time-to-market

- Multiply expert productivity

- Maximize ROI of AI initiatives

Connecting models to labeling and review systems while keeping humans in the loop lets teams ship faster without compromising quality.

Try Agent Hub Today

Speak with our team to learn how SuperAnnotate’s Agent Hub accelerates data workflows and enables organizations to build high-quality data for training and evaluation.