Platforms like Fireworks AI make it easier to run inference and customize generative AI models. But the old machine learning adage still applies: garbage in, garbage out. No matter how advanced the base model, its performance depends on the quality of the data used for Supervised Fine-Tuning (SFT) and Reinforcement Fine-Tuning (RFT).

SuperAnnotate makes it simple to build efficient, human-in-the-loop data and evaluation pipelines, helping teams create the high-quality datasets needed to train better models. With our new Fireworks AI integration, you can now move from trusted data to customized, ready-to-deploy models in minutes.

In this guide, you’ll learn how to:

- Export Q&A data from SuperAnnotate to Fireworks

- Fine-tune a base model using SFT

- Align model behavior through RFT

Automating Workflows with Orchestrate

As AI projects grow, managing data flow and task coordination becomes harder to do manually. Orchestrate solves this by automating workflows inside SuperAnnotate. It links project events to actions, helping teams achieve consistent and repeatable processes. You can combine built-in steps with your own Python code and trigger them automatically when events occur – like a dataset update, annotation completion, or stage change.

There are a few key ideas to understand before building with Orchestrate.

- Custom Actions: Bits of Python code you write and run inside SuperAnnotate. Each action executes in an isolated container whenever the pipeline triggers it.

- Secrets: Secure environment variables (like API keys or tokens) that your actions can access at runtime. They let you keep sensitive values out of your code and logs.

- Manual Inputs: Parameters you provide at runtime when manually triggering a pipeline. They let you override or add values (like a dataset ID or project name) without hardcoding them into your action or secrets.

You can read more about Orchestrate, Custom Actions, Secrets, and Manual inputs in our documentation.

We’ll now look at three production-ready custom actions you can publish. Each section explains:

- What the action does.

- The environment variables (secrets) needed.

- The manual inputs you should provide at runtime.

- Key template details to help you adapt it to your workflows.

1. Uploading Datasets to Fireworks AI

You’ve built a high-quality dataset in SuperAnnotate, now it’s time to get that data into Fireworks AI so you can start fine-tuning your models.

This prebuilt custom action automates the entire process:

- Retrieve annotations from the SuperAnnotate project of your choice

- Convert them into the chat message format Fireworks expects

- Create or update a Fireworks dataset (append if it already exists)

How to Add This Action to Your Project

Inside SuperAnnotate, go to Orchestrate → Actions → New Action → Create Action, then select Upload to Fireworks AI. Once created, you can customize the details and use it in your pipelines.

This action assumes that you have question_area and answer_area fields in your project. If your fields have different names, you need to either change them in the code or in your project.

Configuration

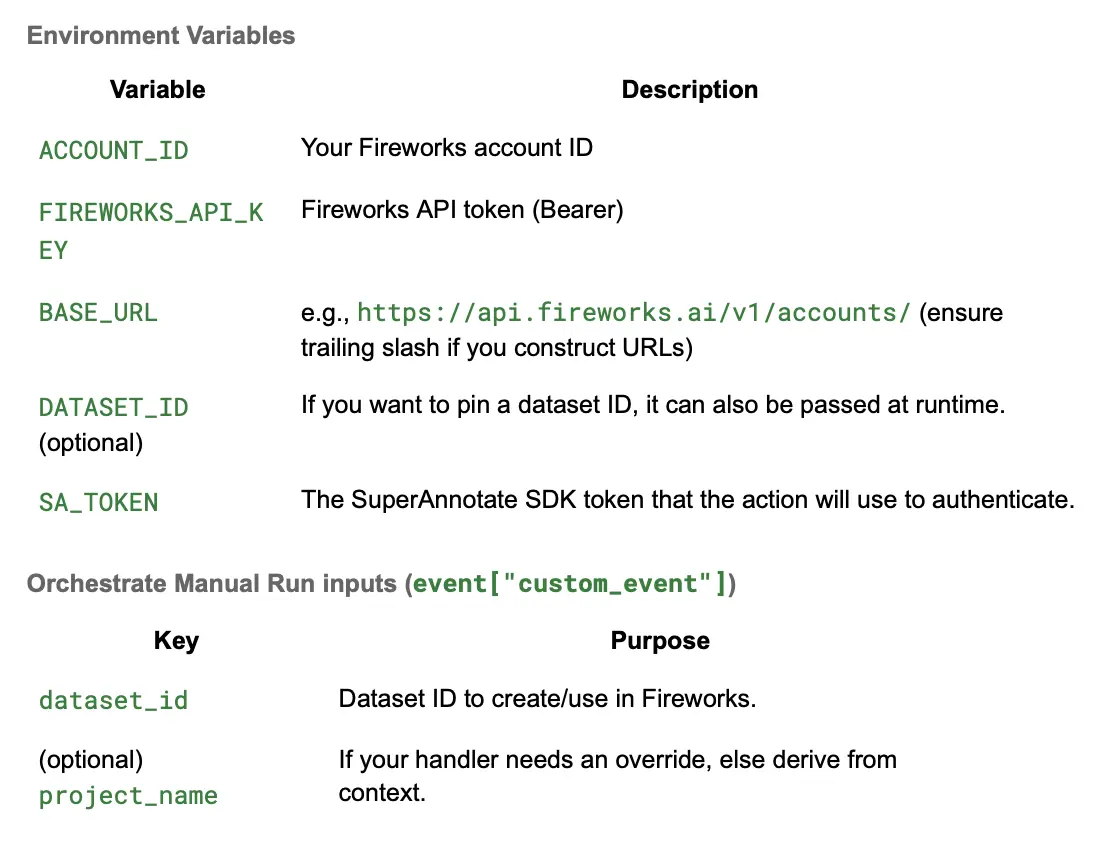

This action needs two things: environment variables (set once) and manual inputs (passed when you run it).

Template Highlights

- Formatter converts question_area / answer_area instances to:

{"messages":[{"role": "user", "content":"<Q>"},{"role" :"assistant", "content":"<A>"}]} - Upload uses either Fireworks REST (: upload) or the Python SDK Dataset.from_list(...).sync() (idempotent).

- If you see 409 Conflict, treat it as “already exists / in-flight create”, log and continue the upload.

2. Supervised Fine-Tuning on Fireworks AI

If you work through multiple rounds of model training – fine-tuning, evaluating, updating the dataset, then fine-tuning again – switching between platforms can slow you down. This action lets you launch a Supervised Fine-Tuning (SFT) job in Fireworks directly from your SuperAnnotate workflow.

How to Add This Action to Your Project

Inside SuperAnnotate, go to Orchestrate → Actions → New Action → Create Action, then select Fireworks AI- Supervised Finetuning. Once created, you can customize the details and use it in your pipelines.

Configuration

This action needs two things: environment variables (set once) and manual inputs (passed when you run it).

Template Highlights

- Builds payload with dataset, outputModel, baseModel, and training params (epochs, LR, LoRA rank, etc.).

- Returns the Fireworks API response text for tracking.

3. Reinforcement Fine-Tuning on Fireworks AI

Once you’ve fine-tuned your base model with supervised data, the next step is often aligning its behavior with your specific policies, preferences, or reward signals. That’s where Reinforcement Fine-Tuning (RFT) comes in.

This custom action lets you start an RFT job in Fireworks directly from your SuperAnnotate workflow:

- Use your prepared dataset to begin alignment training

- Add a custom evaluator (reward function) to guide model outputs

- Automatically reserve evaluation data to track performance during training

You don’t need to manually upload datasets, configure jobs in Fireworks, or manage API calls. Running this action lets you move from supervised to reinforcement fine-tuning in one connected workflow.

How to Add This Action to Your Project

Inside SuperAnnotate, go to Orchestrate → Actions → New Action → Create Action, then select Fireworks AI - Reinforcement Finetuning. Once added, you can edit parameters like base model, learning rate, and evaluator ID to fit your use case.

Configuration

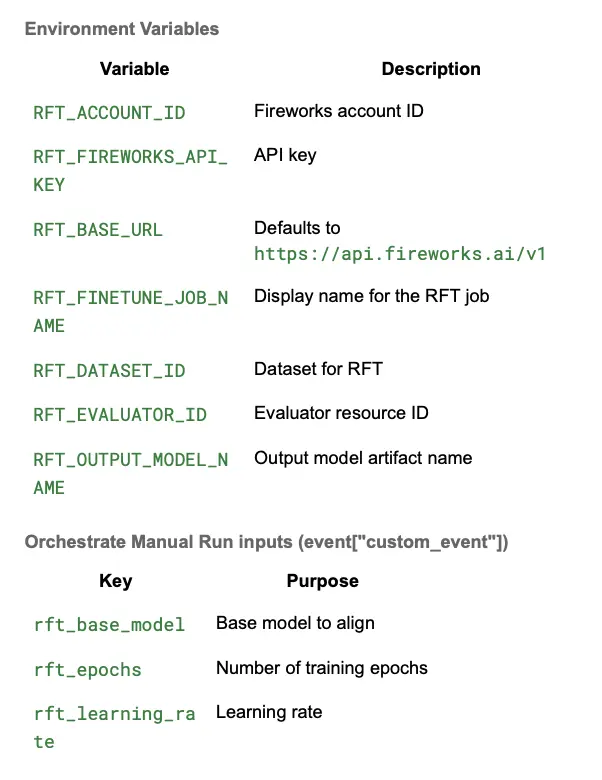

This action needs two things: environment variables (set once) and manual inputs (passed when you run it).

Template Highlights

- Constructs trainingConfig with baseModel, outputModel, learningRate, and epochs.

- Includes evalAutoCarveout: true to reserve eval data automatically.

Tips & Troubleshooting

Even with prebuilt templates, it’s common to hit small issues when setting up pipelines or scaling to larger datasets. Here are a few possible errors and how you can manage them to keep your workflows stable:

- 409 Conflicts: If you create a dataset/job that already exists, interpret as idempotent – log and continue to the next step.

- SuperAnnotate Schema Mismatches: If your instances lack className or different keys (e.g., classTitle), adjust the formatter safely (use .get(...) and skip non-matching instances).

- Variable Errors: Set up all environment variables and required inputs correctly and make sure they are precisely parsed in the code.

- Large files (>150MB): Use Fireworks signed URL flow (:getUploadEndpoint → PUT →:validateUpload); otherwise, direct: upload is the simplest.

- Observability: Add logging around payloads and response bodies.

Closing Notes

Bringing SuperAnnotate and Fireworks AI together creates a smoother path from data to deployment. SuperAnnotate handles data and quality control, Fireworks handles the training infrastructure, and Orchestra automates the data-to-model workflows. Instead of managing each step by hand, teams can rely on clear, automated flows that keep projects moving and models improving with every new batch of data.