As organizations race to deploy AI and agents, many hit the same roadblock: accuracy isn’t good enough for production. Off-the-shelf models, no matter how advanced, lack the deep, contextual knowledge that makes your business and your use case unique. Without this expertise, agents fail to meet the reliability standards required for real-world use.

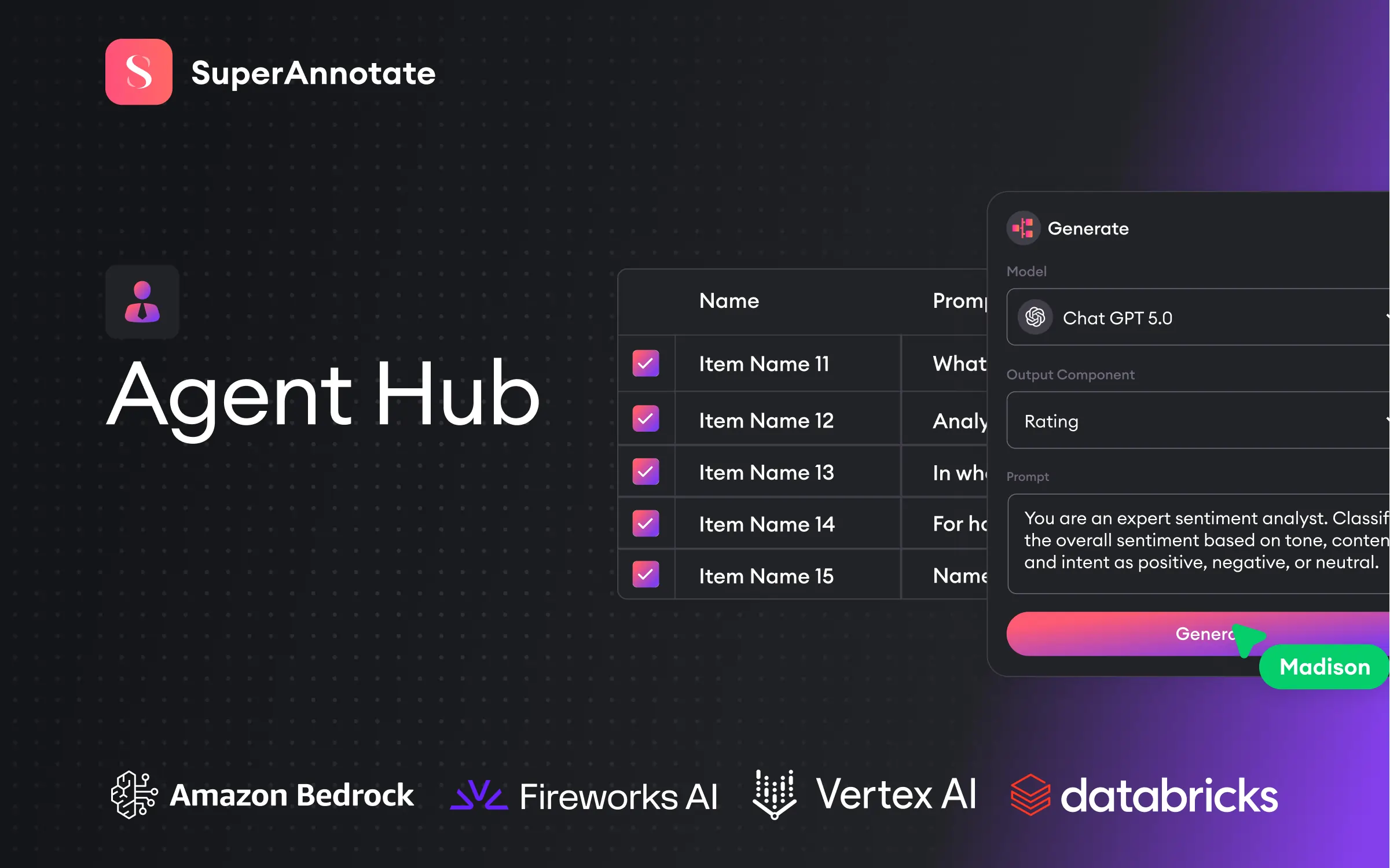

Much of this knowledge sits with subject-matter experts working in the business day-to-day, making it difficult to systematically embed expertise into AI development. To succeed, organizations need a way to combine automation with targeted human input, maximizing the impact of expert oversight without slowing teams down. That’s why we built Agent Hub: a new way to put models directly into the loop, pairing AI agents with human expertise in one unified platform.

By bringing agents directly into your data workflows, from curation and pre-labeling to annotation and evaluation, Agent Hub frees your teams to focus on high-value expert review instead of repetitive tasks.

With Agent Hub, you can:

- Accelerate time-to-market: Reduce labeling and evaluation time by up to 80%.

- Multiply expert impact: Automate repetitive tasks and make expert contributions 5× more efficient.

- Maximize the ROI of AI initiatives: Build agentic AI that actually works, faster than ever.

How Agent Hub Works

Agent Hub lets you combine scalable AI agents and expert human review in one place. Using native integrations with leading foundation model providers, such as OpenAI, Anthropic, and Google Gemini, as well as with enterprise AI platforms like AWS Bedrock, Databricks, Fireworks AI, and Vertex AI, you can create custom data agents that speed up every part of the data workflow.

Data agents give annotators and QA teams intelligent suggestions and quality checks, and they let ML and data engineers build and optimize hybrid evaluation pipelines that combine human reviewers with LLM judges. By scaling human feedback with data agents, you streamline the production of high-quality AI and create data flywheels that keep AI systems accurate and reliable over time.

See Agent Hub in Action

Watch our demo to learn how to leverage agents across curation, annotation, and evaluation. Whether you’re building agentic systems, GenAI applications, or multimodal models, SuperAnnotate’s Agent Hub helps you deploy human feedback pipelines that are automated, scalable, and production-ready.

Case Study

We tested this hybrid evaluation workflow with a large enterprise customer, using 148 chat conversations that would normally require 81 hours of manual review. With weekly volumes of 8,000 to 10,000 items, the customer needed a faster, more scalable approach.
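To put those figures in perspective, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that each weekly item takes about as long to review manually as one of the 148 sampled conversations; the case study does not state this, so treat the result as an order-of-magnitude estimate rather than a measured number.

```python
# Figures taken from the case study above.
REVIEWED_CONVERSATIONS = 148
MANUAL_REVIEW_HOURS = 81

# Average manual review time per conversation.
hours_per_item = MANUAL_REVIEW_HOURS / REVIEWED_CONVERSATIONS
minutes_per_item = hours_per_item * 60


def weekly_manual_hours(items_per_week: int) -> float:
    """Estimated reviewer-hours per week if every item were reviewed manually.

    Assumption (not from the source): a weekly item costs the same
    review time as an average sampled conversation.
    """
    return items_per_week * hours_per_item


low = weekly_manual_hours(8_000)
high = weekly_manual_hours(10_000)

print(f"{minutes_per_item:.1f} minutes per conversation")
print(f"{low:,.0f} to {high:,.0f} reviewer-hours per week")
```

Under that assumption, fully manual review works out to roughly half an hour per conversation and several thousand reviewer-hours per week, which is why reducing the share of items that need expert attention matters at this volume.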

Our hybrid LLM-human evaluation solution combined NeMo Evaluator’s LLM-as-a-Judge with SuperAnnotate’s human-in-the-loop framework. GPT‑4o and Llama‑3.3‑70B served as the “LLM Jury,” each given the same prompt containing the same expert instructions and guidelines. If the models agreed, the result was accepted automatically; if they disagreed, the case was escalated to a subject‑matter expert.
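The agree-or-escalate rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the actual Agent Hub or NeMo Evaluator implementation: `Judge`, `JuryResult`, `jury_evaluate`, and the two stand-in judge functions are hypothetical names, and a real pipeline would replace the stand-ins with calls to the chosen models using the shared prompt.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical judge interface: takes a conversation, returns a verdict label.
Judge = Callable[[str], str]


@dataclass
class JuryResult:
    verdict: Optional[str]  # agreed label, or None when escalated
    escalated: bool         # True when a human expert must review the case
    votes: dict[str, str]   # per-judge labels, kept for auditing


def jury_evaluate(conversation: str, judges: dict[str, Judge]) -> JuryResult:
    """Accept automatically when all judges agree; otherwise escalate."""
    votes = {name: judge(conversation) for name, judge in judges.items()}
    distinct = set(votes.values())
    if len(distinct) == 1:
        return JuryResult(verdict=distinct.pop(), escalated=False, votes=votes)
    return JuryResult(verdict=None, escalated=True, votes=votes)


# Stand-in judges for illustration only; real judges would call LLMs.
def strict_judge(conversation: str) -> str:
    return "fail" if "refund" in conversation.lower() else "pass"


def lenient_judge(conversation: str) -> str:
    return "pass"


judges = {"judge_a": strict_judge, "judge_b": lenient_judge}

agreed = jury_evaluate("How do I reset my password?", judges)
disputed = jury_evaluate("I want a refund now.", judges)

print(agreed.verdict, agreed.escalated)
print(disputed.verdict, disputed.escalated)
```

Keeping the individual votes alongside the outcome is a design choice for this sketch: it gives the subject-matter expert the context for why a case was escalated.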

This setup demonstrated that the hybrid method delivers major time savings with only minimal trade‑offs in quality. By blending automation with targeted expert review, teams can balance cost, speed, and accuracy according to each project's quality requirements and risk tolerance, while still ensuring reliable evaluation outcomes for downstream models.

Book a meeting to see how SuperAnnotate Agent Hub can help you build.