SuperAnnotate is giving customers faster, connected ways to build, label, and evaluate AI datasets. Through an integration with NVIDIA technology, we’re streamlining data pipelines for model development so teams reduce manual effort, improve data quality, and scale AI production with confidence.

This work integrates NVIDIA NeMo microservices with SuperAnnotate’s human-in-the-loop platform and takes the form of several solutions built for enterprise use.

Improving LLM Judge Accuracy with NVIDIA NeMo Evaluator in SuperAnnotate

LLM judges can dramatically speed up model evaluation workflows by automating large-scale assessments, but their usefulness depends on close alignment with human judgment. One of the biggest challenges for AI teams is ensuring that LLM judge outputs match the ground truth. When LLM judges accurately reflect human judgment, teams gain faster time-to-model, deeper insights, and much greater confidence in every model release.

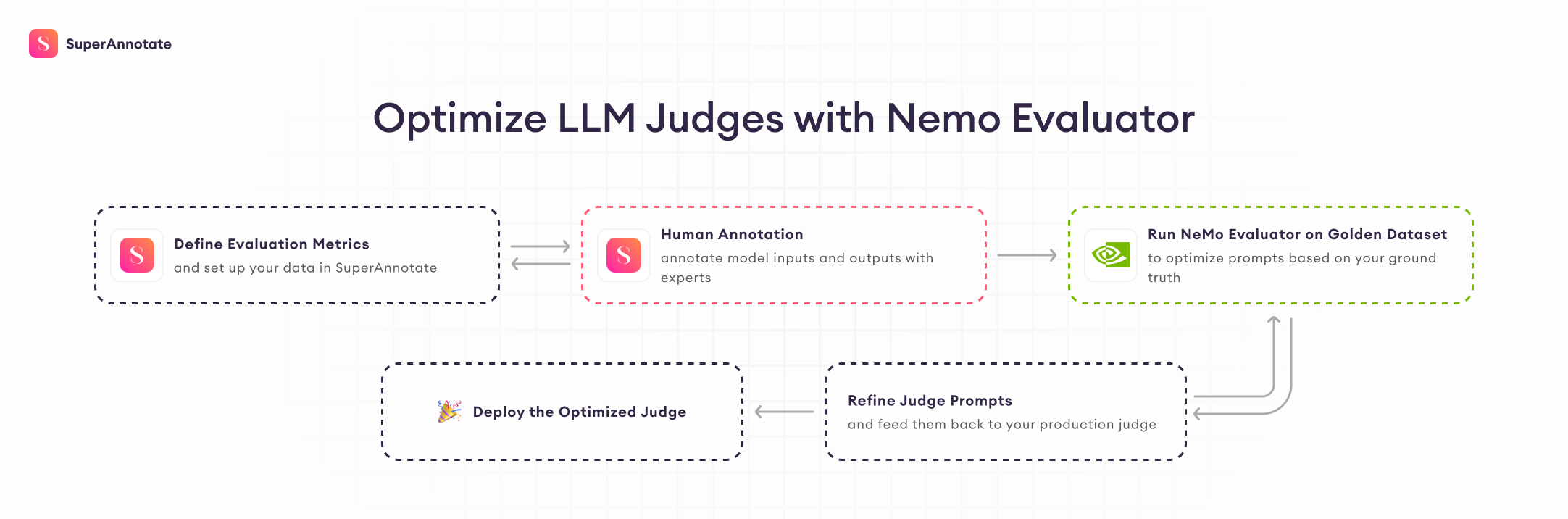

SuperAnnotate creates a seamless workflow where automated judging is firmly grounded in human judgment, solving a critical blocker for production AI. SuperAnnotate’s model evaluation solution, powered by NVIDIA, works as follows:

- SuperAnnotate’s platform enables detailed human review, creating a structured dataset that serves as the gold standard for the automated judge. It gives the judge the clear reference it needs to perform accurately and consistently.

- NVIDIA NeMo Evaluator takes the judge’s initial scores and compares them point by point against the human-labeled gold standard provided by SuperAnnotate. It refines the judge prompt based on the points of disagreement, improving the judge’s output over time. By aligning the prompt with the human gold standard, NeMo Evaluator cuts down the manual iteration usually required to reach that level of accuracy.

Here is a more detailed look at how the evaluation flow comes together inside SuperAnnotate.

- Define your evaluation metric and set up your data in SuperAnnotate.

- Explore your dataset and annotate it with your experts.

- Use a basic prompt for judge evaluation.

- Run NeMo Evaluator to optimize the judge prompt based on your annotated dataset.

- Compare the judge’s results against your ground truth and iterate on the prompt.

- Feed the optimized prompt back to your production judge.

- Use your judges with SuperAnnotate Agent Hub to evaluate data or speed up quality assurance for annotation projects.
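The comparison step above can be illustrated with a minimal sketch. It does not use the NeMo Evaluator or SuperAnnotate APIs; the function name `agreement_report` and the sample labels are hypothetical, and the sketch only shows one common way to quantify how closely a judge tracks human labels (raw agreement plus Cohen's kappa) and to collect the disagreements that would drive the next prompt revision.

```python
from collections import Counter


def agreement_report(human, judge):
    """Compare judge labels with human labels item by item (illustrative only)."""
    if len(human) != len(judge) or not human:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(human)
    # Observed agreement: fraction of items where judge and human match.
    observed = sum(h == j for h, j in zip(human, judge)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    h_counts, j_counts = Counter(human), Counter(judge)
    expected = sum(h_counts[k] * j_counts[k] for k in h_counts) / (n * n)
    # Cohen's kappa corrects observed agreement for chance agreement.
    kappa = 1.0 if expected == 1.0 else (observed - expected) / (1 - expected)
    # Indices of disagreements are the items worth inspecting when revising the prompt.
    disagreements = [i for i, (h, j) in enumerate(zip(human, judge)) if h != j]
    return {"agreement": observed, "kappa": kappa, "disagreements": disagreements}


# Hypothetical data: expert labels versus the judge's first-pass labels.
human_labels = ["pass", "fail", "pass", "pass", "fail", "pass"]
judge_labels = ["pass", "pass", "pass", "pass", "fail", "fail"]
report = agreement_report(human_labels, judge_labels)
print(report)
```

Re-running a report like this after each prompt revision gives a simple way to check whether a change actually moved the judge closer to the human gold standard.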

Through this solution, enterprises gain:

- Faster, more reliable evaluation: Automated prompt calibration reduces manual work and improves alignment between human and AI judgment.

- Scalable workflows: Enterprises can evaluate multiple models and datasets simultaneously, without bottlenecks.

Fine-Tuning NVIDIA Nemotron Models with Labeled Data for Improved Reasoning Performance

Fine-tuning NVIDIA Nemotron models with data labeled in SuperAnnotate gives teams a direct way to improve model performance on tasks that demand unique or domain-specific reasoning. Many enterprise workflows rely on models that must interpret subtle visual details, follow custom logic, or handle edge cases. Through fine-tuning, models learn to handle a company’s proprietary edge cases, follow its internal taxonomies, and make decisions aligned with its unique requirements. This transforms a broadly capable model into one that performs consistently on specialized reasoning tasks.

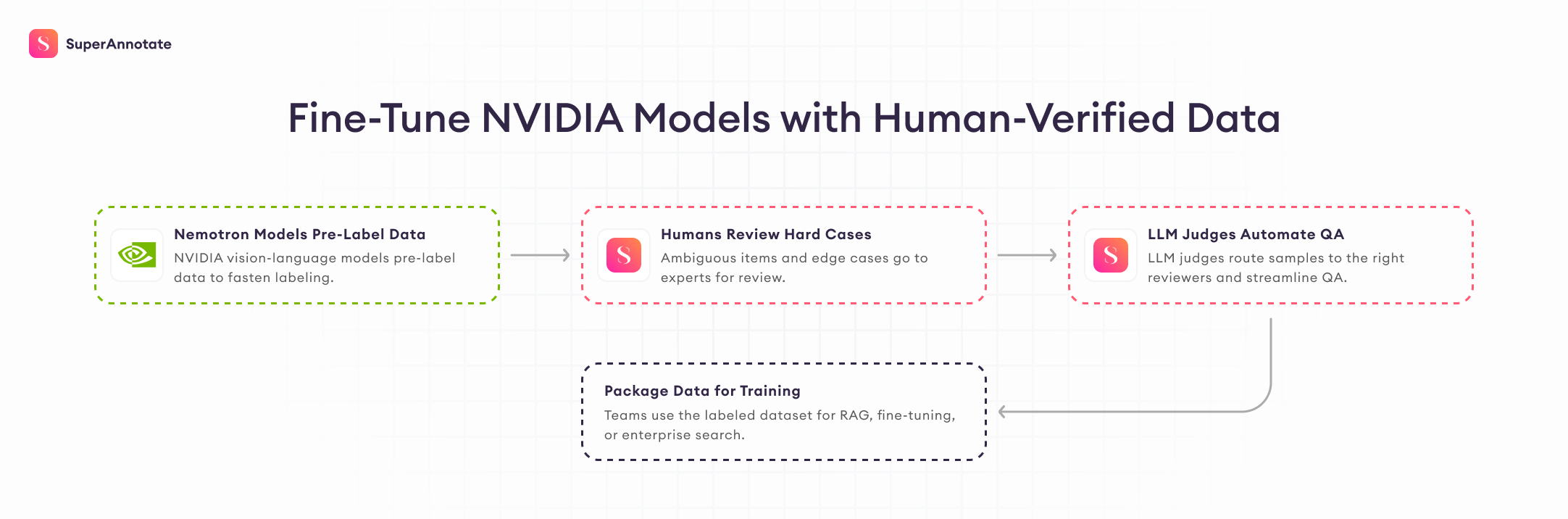

With SuperAnnotate’s human-in-the-loop workflows connected to NVIDIA NeMo, the entire process becomes efficient and scalable. NVIDIA Nemotron models generate an initial pass of predictions, and human reviewers in SuperAnnotate curate them into a fine-tuning dataset. By fine-tuning Nemotron models on high-quality, human-reviewed datasets, organizations can significantly boost accuracy for their specific tasks. The fine-tuned Nemotron models can then be deployed as NVIDIA NIM microservices. The result: fine-tuned NIM microservices that perform better, learn faster, and deliver reliable reasoning tailored to each company’s most important use cases.

Here is how the setup for this flow comes together inside SuperAnnotate.

- Annotators in SuperAnnotate use NVIDIA Nemotron Nano 2 VL to find helpful training data and get pre-annotations to review and correct.

- SuperAnnotate routes only ambiguous predictions to human reviewers. Because the VLM handles the majority of parsing work, reviewers focus only on the difficult, high-value edge cases.

- LLM judges reduce quality assurance effort by routing samples to the right people depending on the results.

- The final structured dataset is exported for retrieval, fine-tuning, or RAG. For example, customers can use the output to fine-tune domain-specific document models, ground RAG pipelines, or build enterprise search systems.
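The routing step in this flow can be sketched in a few lines. The source does not say how a prediction is judged ambiguous, so this sketch assumes a per-prediction confidence score and a fixed threshold; the `Prediction` class, its `confidence` field, the `route` function, and the 0.8 cutoff are all hypothetical and are not part of any SuperAnnotate or NVIDIA API.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # assumed model confidence in [0, 1]; hypothetical field


def route(predictions, threshold=0.8):
    """Split predictions into an auto-accepted queue and a human-review queue."""
    auto, review = [], []
    for p in predictions:
        # Confident predictions are accepted; the rest go to human reviewers.
        (auto if p.confidence >= threshold else review).append(p)
    return auto, review


# Hypothetical pre-annotations produced by a model.
preds = [
    Prediction("doc-1", "invoice", 0.97),
    Prediction("doc-2", "receipt", 0.55),
    Prediction("doc-3", "invoice", 0.81),
    Prediction("doc-4", "contract", 0.42),
]
auto_accepted, needs_review = route(preds)
print([p.item_id for p in needs_review])
```

In practice the threshold is a trade-off: a higher value sends more items to reviewers and raises cost, while a lower value lets more uncertain predictions through unreviewed.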

NVIDIA built this setup inside SuperAnnotate and put the workflow into practice, resulting in a 2x reduction in human review time across all workflows.

With this setup, teams can dramatically reduce enterprise data labeling time and cost, making it possible to create large training datasets quickly and reliably for retrieval, reasoning, and fine-tuning workflows.

A Connected Ecosystem to Accelerate AI Development

Building more accurate and reliable AI systems requires a combination of human expertise and leading model infrastructure. SuperAnnotate and NVIDIA bring these capabilities together in a single integrated ecosystem, making it easier to develop, evaluate and refine models end-to-end.

SuperAnnotate’s human-in-the-loop workflows powered by NVIDIA AI infrastructure work in sync to collect high-quality feedback, validate outputs, improve datasets, and fine-tune models with precision. The result is a continuous improvement loop that drives stronger models and high-performing AI systems.

Special Collaboration: Accelerating ASL and Gesture Recognition Together

SuperAnnotate, Amazon Web Services (AWS), and NVIDIA are collaborating to support the broader American Sign Language (ASL) community with scalable, accessible dataset creation tools. As part of an ongoing mission to teach ASL and curate the world’s largest ASL dataset, SIGNS helps users learn sign language through their webcam with real-time feedback from an AI-driven 3D digital human. The consumer-facing platform also allows fluent signers to contribute video content back to a public dataset.

In collaboration with the American Society for Deaf Children, Hello Monday/DEPT®, AWS, NVIDIA, and SuperAnnotate, SIGNS advances research and commercial work in sign language recognition and user experience that supports the Deaf community.

- Customers gain access to a complete ASL annotation environment powered by NVIDIA and AWS.

- MediaPipe pre-labeling automatically generates body, face, and hand keypoints for ASL videos.

- SuperAnnotate’s multimodal editor enables fast refinement of landmarks with minimal manual work.

- This workflow reduces annotation time by more than 90%, enabling rapid creation of high-quality gesture datasets.
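As a rough illustration of what keypoint pre-labels look like before refinement, the sketch below converts normalized landmark coordinates into pixel-space keypoints for one video frame. MediaPipe reports landmark x and y values normalized to the image width and height; everything else here is an assumption made for illustration, including the function name `to_pixel_keypoints`, the landmark names, and the output dictionary format, which is not SuperAnnotate's actual annotation schema.

```python
def to_pixel_keypoints(landmarks, width, height):
    """Convert normalized (x, y) landmarks to integer pixel coordinates."""
    keypoints = []
    for name, (x, y) in landmarks.items():
        # Clamp so estimates slightly outside the frame stay inside the image.
        px = min(max(round(x * width), 0), width - 1)
        py = min(max(round(y * height), 0), height - 1)
        keypoints.append({"name": name, "x": px, "y": py})
    return keypoints


# Hypothetical normalized landmarks for a single 640x480 frame.
frame_landmarks = {
    "left_wrist": (0.25, 0.5),
    "right_wrist": (0.75, 0.5),
    "nose": (0.5, 1.02),  # slightly out of frame; clamped to the bottom edge
}
keypoints = to_pixel_keypoints(frame_landmarks, width=640, height=480)
print(keypoints)
```

Annotators would then only need to nudge the points that the pre-labeling step placed incorrectly, rather than placing every landmark by hand.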

Commercial and research teams can request free access to label thousands of ASL samples, accelerating progress for ASL and gesture AI.

Final Thoughts

SuperAnnotate’s work has been growing around the needs of teams that handle large volumes of data and expect clearer evaluation steps. Each effort adds more structure to that path and brings more of the workflow into one place. It has turned into a practical way for enterprises to move through data and model work without losing time or quality.

Start building your own data workflows with SuperAnnotate.