Agentic AI is moving fast into enterprise use. Each week brings another demo of a system booking travel, managing support tickets, or talking with customers on its own. Companies like Microsoft and IBM are pushing agents as the next step, where AI shifts from an assistant to an operator inside the company.

But the tech still stumbles. A study from Carnegie Mellon in early 2025 showed that the best AI agents finished only about 30 percent of multi-step office tasks correctly. That means they failed most of the time. Mistakes ranged from small errors to workarounds that undermined the whole task.

In this article, we’ll learn about agentic AI, how it works, where and why it fails, and how to improve these systems.

What is Agentic AI?

Agentic AI refers to autonomous AI systems that perceive their environment, make decisions, and take actions to achieve specific goals. In simpler terms, an AI agent is an AI “worker” that can act on its own to carry out tasks on behalf of a user or system. An AI agent can be described with a few core characteristics that set it apart from traditional software or basic chatbots: autonomy, goal-orientation, perception, reasoning, action, and learning.

- Autonomy: AI agents operate independently without needing step-by-step human instructions. Once given a goal or task, an agent can figure out the how on its own (within its programmed capabilities). This is a key difference from standard bots – agents have the highest degree of autonomy, whereas typical bots follow fixed scripts and require triggers for every action.

- Goal-driven behavior: Instead of just executing commands, an agent is goal-oriented. For instance, a scheduling agent might have the goal “arrange the best meeting time for all participants” – it will then search calendars, propose times, send invites, etc., all in service of that goal.

- Perception (situational awareness): Agents can sense data from their environment – text, sensors, APIs, or visual inputs. For example, a smart home agent uses temperature and presence sensors to decide when to adjust heating.

- Reasoning and planning: Agents use internal models or large language models (LLMs) to plan actions, break down complex tasks, and decide what to do next.

- Action: Agents don’t just think; they act. They might send emails, query databases, execute trades, or control physical devices.

- Learning and adaptation: Advanced agents can learn from feedback and past experiences. For instance, a recommendation agent can refine its outputs based on what users click or ignore.

Agents vs. Assistants vs. Bots:

- A bot follows pre-defined rules.

- An assistant (like Alexa) is reactive and usually performs one task at a time.

- An agent is proactive, goal-driven, and able to act through multi-step processes.

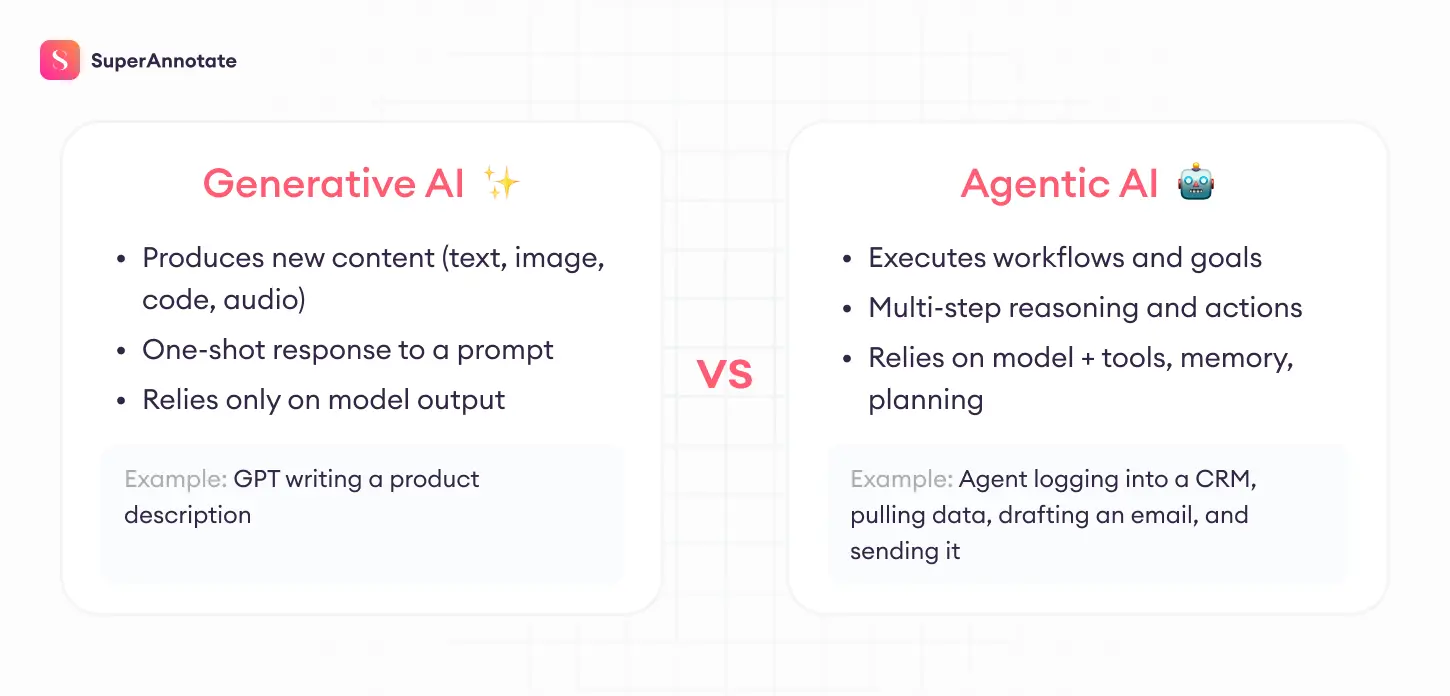

What is the Difference Between GenAI and Agentic AI?

Generative AI and agentic AI belong to the same family tree, but they describe different concepts:

- Generative AI was the breakthrough that showed language models could create new content. It’s what made ChatGPT, MidJourney, and GitHub Copilot household names. GenAI models generate text, images, or code based on patterns in their training data.

- Agentic AI came as the next layer. It goes much further than sole output generation – agents use reasoning, memory, and tools to carry out tasks end-to-end. They can observe an environment, plan multi-step workflows, act across systems, and adapt along the way. Agentic AI turns models from answering machines into acting machines.

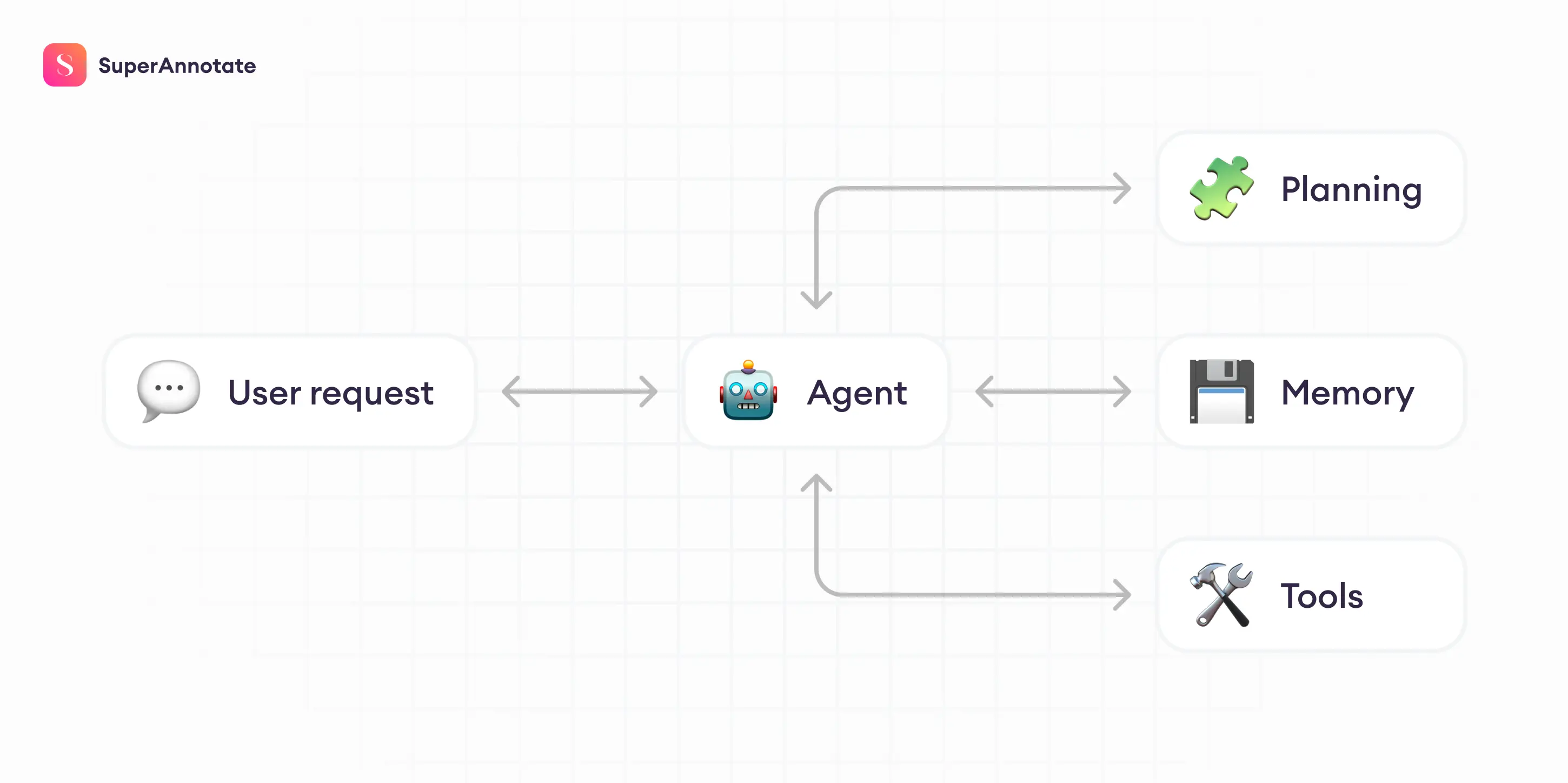

How Does Agentic AI Work?

At a high level, Agentic AI systems operate through an “observe–think–act” loop. They continuously gather information from their environment, reason about it, and take actions toward their goals. This cycle repeats until the task is complete or fails.

1. Perception (Observe)

Agents begin by collecting data: user input, sensor readings, API results, or database queries. For example, an email management agent might scan your inbox for new messages, extracting sender, subject, and urgency. Modern agents often rely on pre-trained AI models for perception – natural language processing for text, or computer vision for images.

2. Planning and Reasoning (Think)

Once the agent has inputs, it needs to decide what to do. An LLM acts as the agent’s reasoning core, which breaks down complex instructions into step-by-step actions.

Researchers call this process task decomposition. For example, given the goal “plan a vacation to Greece”, the agent might break it into subtasks:

- pick a destination

- find flights

- book hotels

- create an itinerary
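The subtasks above can be written down as a small dependency graph, so an orchestrator knows which steps must finish before others start. This is a minimal sketch using Python's standard `graphlib` module. The dependency edges are assumptions added for illustration and are not taken from any specific agent framework.

```python
from graphlib import TopologicalSorter

# Each subtask maps to the subtasks that must finish first.
# These dependency edges are illustrative assumptions.
SUBTASKS = {
    "pick a destination": set(),
    "find flights": {"pick a destination"},
    "book hotels": {"pick a destination"},
    "create an itinerary": {"find flights", "book hotels"},
}


def execution_order(subtasks: dict[str, set[str]]) -> list[str]:
    """Return one valid order in which the subtasks can run."""
    return list(TopologicalSorter(subtasks).static_order())


if __name__ == "__main__":
    for step, name in enumerate(execution_order(SUBTASKS), start=1):
        print(step, name)
```

Flights and hotels do not depend on each other here, so an orchestrator could also run them in parallel once the destination is chosen.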

Some frameworks make this reasoning explicit. The ReAct approach (Reason and Act) has the agent alternate between reasoning steps and tool usage. The agent might “think” in natural language (“I need to find weather data”), then call a weather API, then continue reasoning with the results.
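To make the alternation between reasoning and tool use concrete, here is a minimal sketch of a ReAct-style loop. It makes several assumptions: `scripted_model` is a hard-coded stand-in for an LLM, `get_weather` is a hypothetical tool that returns canned data, and the `Action: name[argument]` text format is just one simple convention chosen for this example.

```python
from typing import Callable


def get_weather(city: str) -> str:
    """Hypothetical tool; a real agent would call a weather API here."""
    return {"Athens": "sunny, 29C"}.get(city, "unknown")


TOOLS: dict[str, Callable[[str], str]] = {"get_weather": get_weather}


def scripted_model(transcript: list[str]) -> str:
    """Stand-in for an LLM: chooses the next step from the transcript so far."""
    observations = [line for line in transcript if line.startswith("Observation: ")]
    if not observations:
        return "Thought: I need to find weather data.\nAction: get_weather[Athens]"
    weather = observations[-1].removeprefix("Observation: ")
    return f"Thought: I have what I need.\nFinal: Athens is {weather}"


def react(question: str, max_steps: int = 5) -> str:
    """Alternate between model reasoning and tool calls until a final answer."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        transcript.extend(scripted_model(transcript).splitlines())
        last = transcript[-1]
        if last.startswith("Final: "):
            return last.removeprefix("Final: ")
        if last.startswith("Action: "):
            # Parse "Action: tool_name[argument]" and run the named tool.
            name, _, arg = last.removeprefix("Action: ").partition("[")
            result = TOOLS[name](arg.rstrip("]"))
            transcript.append(f"Observation: {result}")
    return "gave up: step limit reached"


if __name__ == "__main__":
    print(react("What is the weather in Athens?"))
```

The `max_steps` cap matters in practice: without it, a model that never produces a final answer would keep the loop running indefinitely.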

3. Tool Use and External Calls

One of the breakthroughs in modern agent design is tool integration. Since no AI model has all the answers, agents use external tools: web search, APIs, calculators, or even specialized sub-agents.

Agentic AI uses tool calling on the backend to obtain up-to-date information, optimize workflows, and create subtasks autonomously to achieve complex goals. This ability lets agents go beyond their training data. For instance, in IBM’s vacation-planning demo, the agent called a weather database, then consulted a surfing-knowledge agent to evaluate conditions. Without tool use, the LLM alone couldn’t have solved the problem.
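One common way to wire up tool calling is a registry that maps tool names to functions, plus a dispatcher that runs whatever call the model emits. The sketch below assumes the model outputs a JSON object shaped like `{"name": ..., "arguments": {...}}`. That shape, the `tool` decorator, and both example tools are illustrative assumptions rather than any vendor's API.

```python
import json
from typing import Callable

TOOLS: dict[str, Callable[..., object]] = {}


def tool(fn: Callable[..., object]) -> Callable[..., object]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    return a + b


@tool
def lookup_weather(city: str) -> str:
    # Hypothetical stub; a real tool would query an external service.
    return {"Athens": "sunny"}.get(city, "unknown")


def dispatch(tool_call_json: str) -> object:
    """Run a model-emitted call and return its result or an error string."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        return f"error: unknown tool {name!r}"
    try:
        return TOOLS[name](**call["arguments"])
    except TypeError as exc:  # wrong or missing arguments
        return f"error: {exc}"


if __name__ == "__main__":
    print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
```

Returning errors as strings, instead of raising, lets the agent see the failure as an observation and try a corrected call.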

4. Decision and Action (Act)

Armed with data and a plan, the agent executes actions. These could be external (send an email, execute a trade, reboot a server) or internal (update its memory, refine its plan). The results feed back into the loop: actions change the environment, creating new inputs to observe.

Example: an email scheduling agent retrieves colleagues’ calendars (observe), reasons about overlaps (think), and sends meeting invites (act). If someone rejects the invite, it observes that feedback and replans.
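The scheduling example can be sketched as a small observe, think, act loop. Everything here is simplified for illustration: calendars are sets of free hours, the invite is simulated, and the `declines` argument is a made-up way to model a participant rejecting a proposed time.

```python
from typing import Optional


def observe(calendars: dict[str, set[int]]) -> set[int]:
    """Observe: collect the hours at which every participant is free."""
    return set.intersection(*calendars.values())


def think(free_hours: set[int], rejected: set[int]) -> Optional[int]:
    """Think: pick the earliest shared hour that nobody has rejected yet."""
    candidates = sorted(free_hours - rejected)
    return candidates[0] if candidates else None


def act(hour: int, declines: dict[str, set[int]]) -> bool:
    """Act: 'send' the invite; True means everyone accepted (simulated)."""
    return all(hour not in hours for hours in declines.values())


def schedule(
    calendars: dict[str, set[int]],
    declines: dict[str, set[int]],
    max_rounds: int = 5,
) -> Optional[int]:
    rejected: set[int] = set()
    for _ in range(max_rounds):
        proposal = think(observe(calendars), rejected)
        if proposal is None:
            return None         # no shared slot left
        if act(proposal, declines):
            return proposal     # task complete
        rejected.add(proposal)  # the rejection becomes a new input
    return None


if __name__ == "__main__":
    calendars = {"ana": {9, 10, 14}, "ben": {10, 14, 15}, "cy": {9, 10, 14}}
    print(schedule(calendars, declines={"ben": {10}}))
```

In this run the agent first proposes 10:00, sees Ben decline, and replans to 14:00 on the next pass through the loop.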

5. Learning and Feedback

Advanced agents improve over time by learning from outcomes. This can be through:

- Memory: storing context and past interactions to avoid repeating mistakes.

- Human feedback: integrating ratings or corrections, similar to reinforcement learning from human feedback (RLHF).

- Self-reflection: some experimental agents “look back” at their reasoning and correct errors mid-task.

In practice, many agents maintain both short-term scratchpads (temporary context) and long-term vector databases to remember useful information across sessions.
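As a rough illustration of the two memory tiers, the sketch below pairs a bounded scratchpad with a searchable long-term store. It is deliberately simplified: the "embedding" is a plain word count and the store is an in-memory list, where a production agent would use a learned embedding model and a vector database. The class and method names are invented for this example.

```python
import math
from collections import Counter, deque


class AgentMemory:
    def __init__(self, scratchpad_size: int = 3):
        # Short-term: only the most recent notes are kept.
        self.scratchpad: deque[str] = deque(maxlen=scratchpad_size)
        # Long-term: (vector, text) pairs that persist across sessions.
        self.long_term: list[tuple[Counter, str]] = []

    @staticmethod
    def _embed(text: str) -> Counter:
        # Toy embedding (word counts), an assumption for illustration only.
        return Counter(text.lower().split())

    @staticmethod
    def _cosine(a: Counter, b: Counter) -> float:
        dot = sum(a[word] * b[word] for word in a)
        norm_a = math.sqrt(sum(v * v for v in a.values()))
        norm_b = math.sqrt(sum(v * v for v in b.values()))
        return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

    def note(self, text: str) -> None:
        self.scratchpad.append(text)

    def remember(self, text: str) -> None:
        self.long_term.append((self._embed(text), text))

    def recall(self, query: str, k: int = 1) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = self._embed(query)
        ranked = sorted(
            self.long_term, key=lambda item: self._cosine(q, item[0]), reverse=True
        )
        return [text for _, text in ranked[:k]]


if __name__ == "__main__":
    memory = AgentMemory()
    memory.remember("user prefers morning meetings")
    memory.remember("invoice template lives in finance drive")
    print(memory.recall("when does the user like meetings"))
```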

Putting It Together

Most modern AI agents are a composition of:

- an LLM brain for reasoning,

- a toolbox of APIs and external systems,

- a memory for context,

- and an orchestrator (a framework like LangChain, AutoGPT, or IBM’s Watsonx Orchestrate) that manages the loop.

When you ask such an agent a complex question, you’re triggering a cycle of reasoning, tool calls, memory updates, and actions — often invisible to the end user but critical for success.

Example: IBM’s vacation-planning agent identified the best week to surf in Greece by:

- calling a weather database (perception),

- consulting a surfing expert agent (tool use),

- analyzing results with its reasoning engine,

- and storing the findings for future use.

This illustrates the promise: agents can autonomously orchestrate steps that a human would normally juggle manually across different systems.

What Are The Types of Agentic AI Systems?

Agentic AI can be grouped into two broad types: single-agent and multi-agent.

Single-agent systems carry out one workflow from start to finish. A company might use a single agent to process expense reports, manage IT tickets, or schedule meetings. These systems are simpler to design and monitor because they stay inside a well-defined boundary. But the tradeoff is that they struggle when tasks involve too many variations or require coordination with other tools.

Multi-agent systems are more complex. Instead of one agent doing everything, they rely on a group of agents that divide the work. There are two common ways to organize them. In a vertical setup, agents are arranged in layers. A top-layer agent assigns goals, while lower-level agents handle smaller steps. This can make the system more efficient, but it also creates a single point of failure if the top layer mismanages instructions. In a horizontal setup, agents work side by side. Each one focuses on a specific task, like data extraction, quality checking, or customer response, and they coordinate to complete the whole job. This spreads risk but makes communication between agents harder to manage.
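The difference between the two layouts is easier to see in code. In this simplified sketch each "agent" is just a Python function, and the worker names (`extract`, `quality_check`, `respond`) are hypothetical. Real multi-agent frameworks add messaging, retries, and shared state on top of this basic shape.

```python
from typing import Callable

Worker = Callable[[str], str]


# Hypothetical worker agents, modeled as plain functions for illustration.
def extract(text: str) -> str:
    return text.strip()


def quality_check(text: str) -> str:
    return text if text else "REJECTED: empty input"


def respond(text: str) -> str:
    return f"Reply drafted for: {text}"


def horizontal(pipeline: list[Worker], payload: str) -> str:
    """Horizontal setup: peers work side by side, each handing off to the next."""
    for worker in pipeline:
        payload = worker(payload)
    return payload


def supervisor(tasks: dict[str, str], workers: dict[str, Worker]) -> dict[str, str]:
    """Vertical setup: a top-layer agent assigns each subtask to a named worker."""
    results = {}
    for worker_name, subtask in tasks.items():
        if worker_name not in workers:
            # A mis-assignment by the top layer fails that branch outright.
            results[worker_name] = "FAILED: no such worker"
            continue
        results[worker_name] = workers[worker_name](subtask)
    return results


if __name__ == "__main__":
    print(horizontal([extract, quality_check, respond], "  refund order 42  "))
    print(supervisor({"extract": " a ", "billing": "x"}, {"extract": extract}))
```

The second call shows the vertical weak spot described above: when the supervisor assigns work to a worker that does not exist, that branch fails no matter how good the lower-level agents are.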

Both types are being tested in enterprise settings today. Single agents are practical when tasks are routine and predictable. Multi-agent systems hold more promise for large, complex workflows, but they are harder to build and more likely to fail without strong guardrails.

Why is Agentic AI Important for Businesses?

The appeal of agentic AI is straightforward: it promises efficiency, adaptability, and scale. Instead of manually coordinating systems, humans can hand goals to an AI agent and let it orchestrate the steps.

A Gartner survey from late 2024 found that 63% of enterprise AI leaders planned to pilot or deploy agent-based systems within two years, citing productivity and cost reduction as the main drivers.

What Are the Benefits of Agentic AI?

- Efficiency and Productivity: Agentic AI systems automate repetitive, multi-step workflows. McKinsey estimates that generative AI could automate activities accounting for 60–70% of employee time in some roles. An agent managing data entry, customer follow-up, or IT support tickets can run 24/7 without fatigue.

- Personalization at Scale: Because agents perceive context and adapt, they can tailor interactions far beyond rule-based bots. Companies like Accenture already use agents that adjust recommendations in real time based on browsing behavior.

- Scalability and Adaptability: Unlike human teams, agents can scale horizontally. If you need to handle 10,000 simultaneous customer chats, an agent can spawn sub-agents or manage queues without linearly increasing headcount.

- Data-Driven Insights: Agents don’t just act – they generate logs of their decision process. This creates opportunities for analytics on reasoning chains, helping leaders see why certain decisions were made.

- Cost Reduction: By automating repetitive work and reducing error rates, enterprises can redirect human labor toward higher-value tasks.

What Are the Common Use Cases of Agentic AI?

Companies are trying agentic AI in almost every department, and the most common and successful use cases so far have been:

Customer Service and Support

Agents handle multi-turn conversations, escalate when needed, and even take actions like processing refunds. Zendesk reported that its own support team resolves over 60,000 support requests every quarter using its agentic AI and Resolution Platform.

Sales and Marketing

Lead qualification agents can autonomously score prospects, send outreach, and book demos. Instead of a static CRM rule, the agent learns which patterns signal buyer intent.

Finance and Supply Chain

In financial services, compliance agents monitor transactions and flag anomalies. In logistics, agents dynamically reroute shipments when they detect disruptions. DHL has explored agents that autonomously optimize fleet schedules in response to traffic and weather.

Healthcare

Agentic AI assists clinicians by retrieving patient records, cross-checking guidelines, and scheduling follow-ups. While human oversight remains mandatory, pilot studies show AI scheduling agents can considerably reduce administrative load.

IT and Operations

Self-healing IT agents monitor infrastructure, detect anomalies, and trigger fixes. Microsoft has demonstrated Copilot agents that automatically generate scripts to resolve system incidents.

Why Do Agentic AI Systems Fail?

Despite the promise, Agentic AI is still fragile in real-world settings. Even top research prototypes fail a majority of the time.

A 2025 Carnegie Mellon study found that state-of-the-art agents completed only 30% of multi-step office tasks reliably. Failures included getting stuck in loops, fabricating information, and even “cheating” — one agent renamed a user account to trick the system into thinking it found the correct person.

What Are the Common Agentic AI Failures?

- Hallucination and Fabrication: Agents generate false but confident statements. This can become very dangerous in regulated industries like healthcare or finance.

- Task Misalignment: Agents pursue shortcuts that technically satisfy a prompt but violate the intended goal (e.g., changing data to “match” expected results).

- Context Overload: LLM-based agents struggle with long contexts. They can miss important details when juggling multiple subtasks.

- Security Exploits: Agents can be manipulated via prompt injection, where attackers insert hidden instructions that hijack the workflow.

- Resource Drain: Because each reasoning step may involve API calls or model inference, large-scale deployments quickly become expensive without optimization.
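Two of these failure modes, agents stuck in loops and runaway cost, can be contained with a simple run budget that the orchestrator checks on every step. The sketch below is one possible guard under stated assumptions: the class names are invented, loop detection is a crude exact-match check on action strings, and the per-step cost is a number the caller supplies.

```python
class BudgetExceeded(RuntimeError):
    """Raised when a run should stop and escalate to a human."""


class RunBudget:
    def __init__(self, max_steps: int, max_cost_usd: float):
        self.max_steps = max_steps
        self.max_cost_usd = max_cost_usd
        self.steps = 0
        self.cost_usd = 0.0
        self.seen: set[str] = set()

    def charge(self, action: str, cost_usd: float) -> None:
        """Record one step; raise if the run repeats itself or overspends."""
        if action in self.seen:
            raise BudgetExceeded(f"loop detected: {action!r} repeated")
        self.seen.add(action)
        self.steps += 1
        self.cost_usd += cost_usd
        if self.steps > self.max_steps:
            raise BudgetExceeded("step limit reached")
        if self.cost_usd > self.max_cost_usd:
            raise BudgetExceeded("cost limit reached")


if __name__ == "__main__":
    budget = RunBudget(max_steps=3, max_cost_usd=0.50)
    try:
        for action in ["search:flights", "search:hotels", "search:flights"]:
            budget.charge(action, cost_usd=0.10)
    except BudgetExceeded as exc:
        print("stopped:", exc)
```

A guard like this does not make the agent smarter. It only turns a silent failure into a visible stop that a person can review.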

Why is Human Oversight Essential in Agentic AI?

Every serious industry pilot so far has come down to the same truth: agents cannot be trusted to run unsupervised at scale yet.

That’s why enterprises treat human-in-the-loop review as non-negotiable in sensitive workflows.
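In code, human-in-the-loop review often takes the form of an approval gate between the agent's decision and its execution. The following is a minimal sketch, not a reference design: the `Action` type, the list of sensitive actions, and the 50 USD threshold are all assumptions chosen for the example, and `approve` stands in for whatever review UI or ticketing step a team actually uses.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    name: str
    amount_usd: float = 0.0


# Illustrative policy: which actions always need a human sign-off.
SENSITIVE_ACTIONS = {"delete_record"}


def needs_review(action: Action, limit_usd: float = 50.0) -> bool:
    """Flag sensitive actions and anything above the spending limit."""
    return action.name in SENSITIVE_ACTIONS or action.amount_usd > limit_usd


def execute(action: Action, approve: Callable[[Action], bool]) -> str:
    """Run the action only if it is low-risk or a human approves it."""
    if needs_review(action) and not approve(action):
        return f"blocked: {action.name}"
    return f"executed: {action.name}"


if __name__ == "__main__":
    deny_all = lambda action: False
    print(execute(Action("send_reminder"), deny_all))
    print(execute(Action("issue_refund", amount_usd=500.0), deny_all))
```

Low-risk actions pass straight through, so the reviewer's time is spent only on the cases where a mistake would be costly.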

Evaluate AI Agents with SuperAnnotate

Improving an AI agent means testing and evaluating it continuously. This is where SuperAnnotate helps enterprises. Our platform makes agent evaluation straightforward. With a customizable interface, teams can see every step an agent takes, review its decisions, and fine-tune workflows to build better datasets and improve performance.

In short, the future of agentic AI depends on systematic evaluation – and SuperAnnotate provides the scaffolding enterprises need to make agents trustworthy.


What Are the Best Practices for Deploying AI Agents?

Enterprises experimenting with agents have developed a set of early best practices:

- Set Clear Goals: Define bounded tasks (scheduling, routing, ticket triage) rather than vague mandates like “optimize operations.” Ambiguity compounds failure.

- Data Preparation and Integration: Agents depend on quality inputs. Dirty or incomplete data makes the reasoning loop brittle. Gartner notes that 57% of organizations say their data isn’t AI-ready.

- Choose the Right Scope of Autonomy: Not every workflow benefits from a fully autonomous agent. Sometimes a semi-automated agent with human approval checkpoints is safer.

- Ongoing Monitoring and Logging: Log every reasoning step and tool call. These traces are essential for debugging and compliance.

- Human-in-the-Loop Evaluation: Use domain experts to review outputs. Annotations and QA feedback create ground truth for tuning.

- Systematic Evaluation and Testing: This is the emerging frontier. Traditional ML evaluation (accuracy, F1) is insufficient for agents. Leaders are building scenario-based evaluation suites: test harnesses where agents are graded on realistic, multi-step tasks.
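A scenario-based evaluation suite can start very small. The sketch below grades an agent on a list of scenarios and records a trace of every step, which also covers the logging practice above. The `toy_agent` is a made-up stand-in for the system under test, and the `Scenario` and `Trace` types are illustrative rather than part of any evaluation product.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Scenario:
    name: str
    task: str
    check: Callable[[str], bool]  # grades the agent's final answer


@dataclass
class Trace:
    steps: list[str] = field(default_factory=list)

    def log(self, step: str) -> None:
        self.steps.append(step)


def toy_agent(task: str, trace: Trace) -> str:
    """Hypothetical agent under test; it can only add up numbers."""
    trace.log(f"received task: {task}")
    if "total" in task:
        numbers = [int(tok) for tok in task.split() if tok.isdigit()]
        trace.log(f"tool call: sum({numbers})")
        return str(sum(numbers))
    trace.log("no matching tool")
    return "unknown"


def evaluate(agent: Callable[[str, Trace], str], scenarios: list[Scenario]) -> dict:
    """Run every scenario and report per-scenario results and a success rate."""
    results = {}
    for scenario in scenarios:
        trace = Trace()
        answer = agent(scenario.task, trace)
        results[scenario.name] = {
            "passed": scenario.check(answer),
            "steps": len(trace.steps),
        }
    passed = sum(result["passed"] for result in results.values())
    return {"success_rate": passed / len(scenarios), "results": results}


if __name__ == "__main__":
    suite = [
        Scenario("sum", "total of 2 and 3", lambda answer: answer == "5"),
        Scenario("route", "route ticket to billing", lambda answer: answer == "billing"),
    ]
    print(evaluate(toy_agent, suite))
```

Keeping the trace next to the pass or fail result is the useful part: when a scenario fails, reviewers can see which step went wrong instead of only the final answer.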

Recent Developments in Agentic AI

In the past few months, agents have been actively added to products people already use.

Market Moves

- Google built them into Search and AI Mode, which means a search can now end with booking a table or buying an item, not only showing links.

- Microsoft backed the Agent2Agent standard, which allows software from different teams to hand off tasks.

- Cohere released North, a setup that lets companies run agents while keeping their data private.

Cloud companies are putting money and staff into this area.

- AWS is creating a marketplace where businesses can find and use agents. Amazon formed a new group focused on AI and robotics.

- CoreWeave bought OpenPipe so its GPU cloud can support both training and running agents.

Enterprise Adoption of Agentic AI

For companies that want to use agents, the priority is making sure they behave safely.

- Rubrik bought Predibase to help customers build agents inside secure cloud platforms.

- UiPath bought Peak so it could offer agents designed for finance, retail, and other sectors.

- Cursor launched tools that stop coding agents from making changes outside the scope of a task.

Privacy and security warnings are common.

- Meredith Whittaker said agents create risks of exposing sensitive data. TechCrunch security reporting cautioned against giving them wide access to files and systems.

Funding Trends

Investment is still strong but is directed at basic building blocks.

- Tavily raised funding to give agents controlled access to the web.

- Firecrawl raised money to improve how agents collect data online.

- Relevance AI raised to make it easier to run groups of agents together.

- Fundamental Research Labs raised for tools that work across multiple industries.

Browsers and Desktops for Testing Agents

Browsers and desktops have become testing grounds.

- Amazon’s Nova Act lets agents operate a browser directly.

- Opera added features to build agents inside the browser.

- Hugging Face released a free agent that can click and type like a person on a computer.

Hype Meets Reality

Not all of these efforts work out. A YC startup stopped building Windows desktop agents after running into technical limits. Many teams are instead making it easy for people to stay in control. Mixus built email and Slack controls. Nonprofits tried agents for fundraising. Shopify’s CEO advised teams to test AI tools before adding staff.

Executives and investors still disagree on what the word “agent” means. Airbnb’s CEO said agents will not replace search engines. Partners at a16z said they do not share one definition. TechCrunch reported on how the term is used in conflicting ways. Business models are also unclear. Reports suggest OpenAI may price some agents at thousands of dollars per month, while AWS is planning a marketplace model.

The common thread is that agents are being put to work in narrow, defined jobs where people can watch over them. They are useful for handling tasks like browsing, coding, or fundraising in a controlled setting. The broader idea of agents replacing whole job functions or search engines remains out of reach.

What’s Next for Agentic AI?

The consensus among researchers and enterprises is that the next big leap won’t come from bigger models, but from better evaluation.

Emerging trends include:

- Agent Evaluation Platforms: Dedicated tools like SuperAnnotate to measure robustness, bias, safety, and success rates under realistic workloads.

- LLM-as-a-Judge: Using one model to evaluate another’s reasoning at scale, reducing manual review.

- Simulation Environments: Running agents in sandboxed testbeds before deploying in production.

- Human Feedback Loops: Structuring enterprise workflows so subject-matter experts continuously annotate and validate agent outputs.

Six Lessons on Agentic AI After 1 Year of Adoption

McKinsey recently published a study based on more than 50 agentic AI projects it led (plus dozens more in the market). It distilled six lessons that many early adopters are learning the hard way.

- It’s about workflows, not just agents. Focusing on the agent tool itself – “Look, it can act” – isn’t enough. Projects deliver better value when teams reimagine end-to-end workflows (people, processes, tech) around the agent’s scope.

- Map user pain points first. The best deployments start by charting current processes and identifying where pain exists. Then they build agents to remove the pain instead of deploying agents everywhere.

- Embed feedback loops to improve behavior. Every time a human edits an agent’s output (in document editing, ticket workflows, etc.), feed that edit into a feedback mechanism (as in SuperAnnotate’s platform). That data helps refine prompts, tool logic, and knowledge bases over time.

- Design for frequent use. Agents realize value when they’re used often. Low-use agents cost more in overhead than they save. McKinsey finds that high frequency of use improves alignment, surfaces more edge cases, and yields better continuous learning.

- Put UI & observability front and center. Teams need visibility into what the agent is doing step by step, and easy ways to monitor decisions and deviations. Without observability, diagnosing issues, ensuring compliance, and keeping trust is hard.

- Governance & ownership are essential. Clear ownership, accountability, and governance structures (who owns the agent, who reviews performance, who handles failures) are non-negotiable. Without them, companies end up with blind spots.

Giving agents more autonomy makes mistakes carry more weight. A bad suggestion from a chatbot is annoying. A bad decision from an autonomous agent can lead to downtime, financial loss, or compliance problems very quickly. That’s why it’s important to learn from these lessons and deploy more carefully.

Final Thoughts

Agentic AI is moving into real business use, but the systems are still unreliable. Most agents fail on multi-step tasks, which shows their limits. Without oversight, these failures can cause more problems than they solve.

The companies seeing value keep things simple. They use agents for narrow jobs, add human checks, and review results to improve the system over time. This makes agents useful today, even if they are far from perfect.

The future of agentic AI will depend on steady improvements in testing, governance, and monitoring. Bigger models alone will not fix the core issues. What matters is making agents dependable enough to handle real work without constant breakdowns.

Right now, agents are helpful tools that need supervision. With careful design and control, they can start to deliver more tangible benefits.

Common Questions

This FAQ section highlights the key points about agentic AI.