Most ML projects begin with a spreadsheet. It feels like the obvious choice when you just need a place to track what is being labeled, who is doing the work, and how much progress has been made. You can set one up quickly and share it with your team without procurement delays. For small pilots, that simplicity is exactly what you want.

As projects scale, though, spreadsheets eventually hit a ceiling. They add hidden costs to your workflow and gradually become a serious drag. At the same time, there are plenty of cases where spreadsheets remain the reasonable choice. The trick is knowing which side of that line you are on.

Many of our clients ran into the limits of spreadsheets before moving to an annotation tool. In this piece, we'll cover when spreadsheets work, when it makes sense to switch, and the challenges our customers faced on their way to SuperAnnotate.

Why Spreadsheets Make Sense in the Beginning

A spreadsheet is universal. Everyone knows how to use it, vendors can pick it up without training, and it’s easy to start with. That makes it a good fit when you are still figuring out the basics of a project.

Even established teams keep spreadsheets in play for smaller tasks. They can be the right tool for quick evaluations, side projects, or one-off labeling jobs. If you only need a few thousand examples to test an idea, a sheet may actually be the fastest and cleanest path.

So the case for spreadsheets is not a weak one. They are the right answer when the cost of coordination is low and the goal is speed over process.

The Slow Build of Spreadsheet Pain

The problems do not usually appear in one dramatic moment. They build over time, and they often show up as model issues. A drop in performance sends the team digging, only to discover that the root cause sits in the data.

Here are the main spreadsheet issues that our customers struggled with before coming to SuperAnnotate.

No separation of roles

One of the biggest problems with spreadsheets is the lack of role separation. You cannot easily support workflows where multiple people work on the same item, such as an annotator followed by QA. The sheet cannot tell who did what. Duplicating rows for each stage or leaving comments inside a single cell quickly becomes unmanageable.

Easy to break with scale

When the dataset grows large, it becomes difficult to keep track of everything. We heard this from almost every customer who previously worked in spreadsheets. With thousands of rows, it is easy to edit a cell by mistake and corrupt work that others depend on. Some teams try splitting the dataset into separate spreadsheets for each annotator, but that makes tracking even harder. Copying and pasting rows introduces errors, and reconciling all the files adds more overhead.

Item and people management

Managing data items and annotators inside a spreadsheet is difficult once you add real workflows. Multi-stage pipelines such as Annotation → QA1 → QA2 are nearly impossible to run. At best you end up moving items manually between sheets, which is slow and error-prone. It is also very hard to ensure multiple reviewers see the same item when that is required for quality.

No visibility control

Everyone with access to the spreadsheet sees everything. There is no way to scope views so that each person only works on their own items. For projects with vendors or external contributors, that lack of control becomes risky and creates confusion.

Difficult IAA and QA tracking

One of our enterprise clients, now using SuperAnnotate for model evaluation, told us that inter-annotator agreement (IAA) was almost impossible to measure in spreadsheets. They had no reliable way to see how often annotators disagreed or whether reviewers were consistent.
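To make "measuring agreement" concrete, here is a minimal sketch of one common metric, Cohen's kappa for two annotators labeling the same items. The client's actual metric is not stated; kappa is our illustrative choice, and the labels below are made up.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators on the same items, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator labeled at random with their own label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label for every item: perfect agreement.
        return 1.0
    return (observed - expected) / (1 - expected)


annotator_1 = ["weed", "weed", "crop", "crop"]
annotator_2 = ["weed", "crop", "crop", "crop"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

Values near 1 mean strong agreement, and values near 0 mean agreement no better than chance. Running this across every annotator pair and every batch is the kind of bookkeeping that is painful to maintain by hand in a sheet. For more than two annotators, Fleiss' kappa or Krippendorff's alpha are common alternatives.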

Poor support for complex tasks

Spreadsheets can handle very simple labeling tasks, such as text markup or classification. Beyond that, they fall apart. Images, video, and audio are not practical to annotate in a table. Complex markup like code annotation is also nearly impossible to manage this way. Some companies have tried to push spreadsheets further, but the workarounds never really scaled.

Weak analytics

Spreadsheets offer only basic sorting and filtering. If you need to search for specific items, track annotator accuracy, or share problematic examples, the process is limited and clunky. By contrast, platforms can surface these insights instantly.

Security concerns

Spreadsheets create risks for sensitive data. Google Sheets stores your data on Google's servers, which many clients cannot accept. There is also no easy way to integrate with private or on-premises storage systems. A platform can enforce secure storage and proper access control, while spreadsheets cannot.

What Platforms Change

The issues that weigh down spreadsheets can be solved once the work runs inside a system built for annotation.

Spreadsheets can’t separate roles, so annotators and reviewers step on each other’s work. A platform makes those stages explicit. One person labels, another reviews, and the system knows who did what. You don’t have to duplicate rows or hide comments in cells to track progress.

Accidental edits stop being a constant risk. In a sheet, one wrong click can change a label or break a formula for everyone. In a platform, items are locked to the person working on them, and edits are logged. That simple control removes a lot of uncertainty.
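The locking-plus-logging idea can be sketched in a few lines of Python. The `AnnotationItem` class and its methods are hypothetical, not SuperAnnotate's API, and a real platform would persist the lock and the log in a database rather than in memory.

```python
from datetime import datetime, timezone


class AnnotationItem:
    """Hypothetical item that only its current holder can edit, with every change logged."""

    def __init__(self, item_id):
        self.item_id = item_id
        self.label = None
        self.locked_by = None
        self.audit_log = []

    def checkout(self, user):
        # Lock the item for one user; anyone else is refused until it is released.
        if self.locked_by not in (None, user):
            raise PermissionError(f"{self.item_id} is locked by {self.locked_by}")
        self.locked_by = user

    def set_label(self, user, label):
        # Only the lock holder may edit, so a stray click elsewhere cannot overwrite work.
        if self.locked_by != user:
            raise PermissionError(f"{user} does not hold the lock on {self.item_id}")
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "old": self.label,
            "new": label,
        })
        self.label = label

    def release(self, user):
        if self.locked_by != user:
            raise PermissionError(f"{user} does not hold the lock on {self.item_id}")
        self.locked_by = None


item = AnnotationItem("img-042")
item.checkout("alice")
item.set_label("alice", "weed")
try:
    item.set_label("bob", "crop")
except PermissionError as err:
    print(err)  # bob does not hold the lock on img-042
item.release("alice")
item.checkout("bob")
item.set_label("bob", "crop")
print([(e["user"], e["old"], e["new"]) for e in item.audit_log])
# [('alice', None, 'weed'), ('bob', 'weed', 'crop')]
```

Because each entry records the old and new value, any label can be traced back to who changed it and when, which is exactly what a shared sheet loses after an accidental edit.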

Visibility becomes manageable. Instead of every contributor seeing the whole dataset, a platform scopes work to the right group. An annotator sees only their batch. A vendor sees only their assigned project. You don’t need to juggle dozens of separate spreadsheets just to keep data partitioned.

Complex tasks become possible. Images, video, audio, and tasks that combine several data types can be handled in purpose-built tools like SuperAnnotate instead of being forced into table cells.

Multi-stage workflows stop being manual. If a project requires Annotation → QA1 → QA2, a platform can route items through those stages automatically.
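As an illustration of automatic routing, here is a minimal state-machine sketch in Python. The stage names mirror Annotation → QA1 → QA2; the `Item` and `submit` names are hypothetical, and two rules are our own assumptions: a QA rejection sends the item back to annotation, and nobody may review their own annotation.

```python
from dataclasses import dataclass, field

# Stage order mirroring Annotation -> QA1 -> QA2; "done" marks a finished item.
STAGES = ["annotation", "qa1", "qa2", "done"]


@dataclass
class Item:
    item_id: str
    stage: str = "annotation"
    history: list = field(default_factory=list)  # (stage, worker, approved) tuples


def last_annotator(item):
    """Return who produced the most recent annotation, or None."""
    for stage, worker, _ in reversed(item.history):
        if stage == "annotation":
            return worker
    return None


def submit(item, worker, approved=True):
    """Record one stage's result and route the item to its next stage."""
    if item.stage == "done":
        raise ValueError(f"{item.item_id} is already finished")
    # Role separation: nobody may review their own annotation.
    if item.stage != "annotation" and worker == last_annotator(item):
        raise PermissionError(f"{worker} cannot review their own work")
    item.history.append((item.stage, worker, approved))
    if item.stage == "annotation" or approved:
        item.stage = STAGES[STAGES.index(item.stage) + 1]
    else:
        # A QA rejection sends the item back for re-annotation.
        item.stage = "annotation"
    return item.stage


item = Item("img-001")
print(submit(item, "alice"))                  # qa1
print(submit(item, "bob"))                    # qa2
print(submit(item, "carol", approved=False))  # annotation
print(submit(item, "alice"))                  # qa1
```

The history list doubles as the "who did what" record that a spreadsheet cannot keep, and adding a stage is a one-line change to `STAGES` rather than a new tab to copy rows into.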

Analytics gets simpler. Instead of building scripts to track annotator accuracy or agreement, those numbers appear inside the workflow. You can search for items, flag problems, and pull up examples without leaving the system.

Finally, most security concerns can be addressed if you choose the right tool. Data stays in the storage you choose, access is tied to roles, and audit logs keep track of changes. For teams dealing with sensitive material, this removes a major barrier.

The Tipping Point Toward a Platform

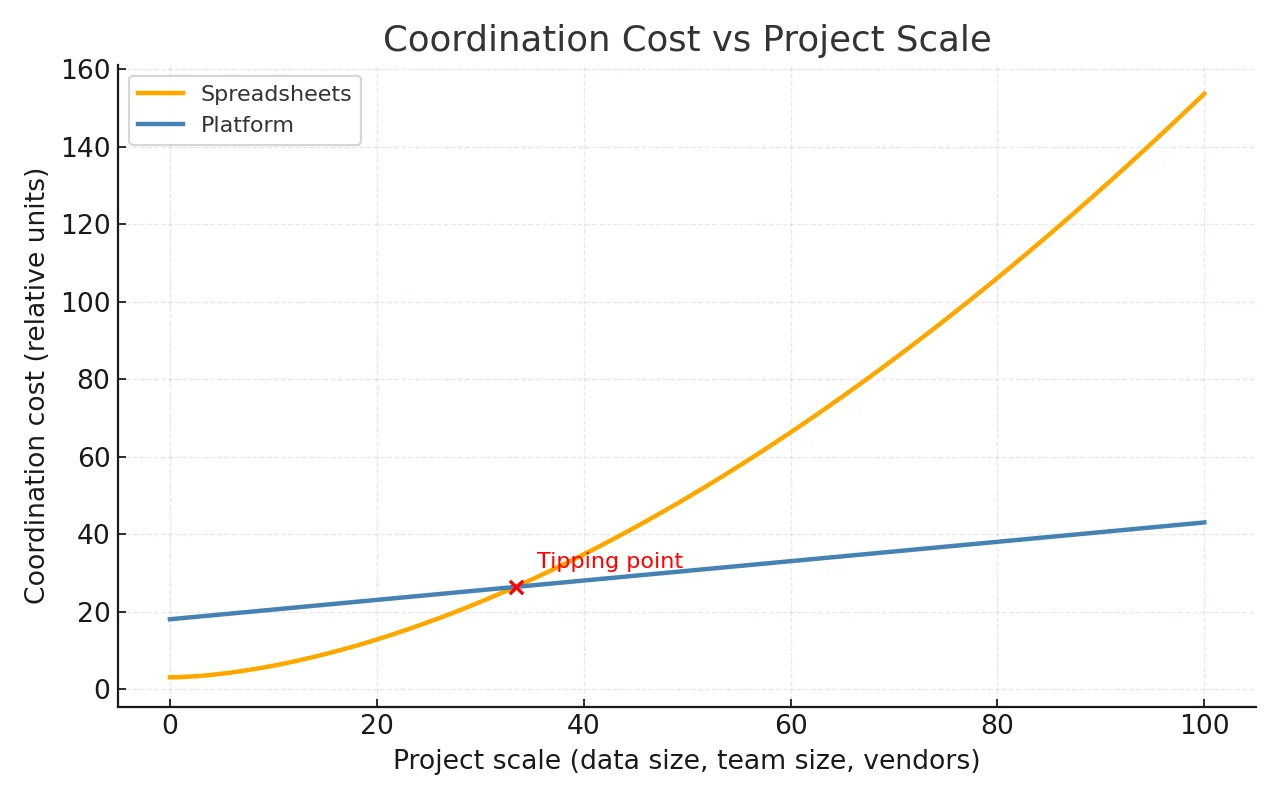

The case for moving comes when projects grow – more data, more people, and constant changes make spreadsheets too costly to manage.

You are usually at that point when multiple vendors are working at once, when taxonomy updates are hard to track, or when you cannot explain which dataset version produced a model result.

It is not just about size. A single vendor labeling ten thousand items on a stable schema may do fine in a sheet. Fifty thousand items across three vendors with guidelines shifting every few weeks will not.

How Taranis Moved from Spreadsheets to SuperAnnotate

Taranis, a precision agriculture company, needed to turn massive volumes of drone and satellite imagery into clear agronomic recommendations with GenAI. Their goal was to give farmers quick, reliable insights on crop health, weeds, and disease – all before the planting season began.

Like many of our customers, Taranis started in spreadsheets. For them, the pressure came from a seasonal deadline. Slow QA and fragmented reviews made spreadsheets unworkable, so they needed a platform that could move faster.

With deadlines approaching, Taranis turned to SuperAnnotate. With annotation and review centralized on one platform, agronomists could work directly in a custom no-code UI, flag errors, and refine outputs without waiting on developers.

“Other platforms required front-end developers just to get started. With SuperAnnotate, I could do it all myself,” said Itamar Levi, Lead Agronomist.

In six months, the team had a GenAI assistant running at 95% accuracy. Annotation review time dropped by 80%, the dataset expanded 50x, and the product launched in time for the season.

How to Test an Annotation Tool

If you're moving from spreadsheets to a dedicated tool, it pays to compare options carefully. Annotation tools vary in the modalities they support, automation, active learning, collaboration features, and more.

A few criteria to consider when choosing an annotation and eval tool:

- Data & task coverage
  - Modalities you use today and expect in 6–12 months (CV, text/LLM, audio, PDF, 3D/point cloud).
  - LLM workflows: side-by-side ranking, chat evals, preference collection, prompt tooling.
- Quality & evaluation
  - Multi-stage review, consensus/IAA, golden sets, auto-QA rules, evaluator guidance, and analytics.
- Model-in-the-loop & automation
  - Pre-labeling with foundation models, active learning loops, event webhooks, SDKs.
- Collaboration & operations
  - Workflow management, task assignment and tracking, in-platform comments and clarifications.
  - Customizable evaluation UIs with role-based views.
  - Analytics on reviewer consistency, model performance, and prompt issues.

If you’re looking for an annotation tool that supports multimodal use cases, offers a customizable evaluation UI, includes automated pre-labeling, and provides advanced QA with analytics, you can book a SuperAnnotate demo to test it against your needs.

Thinking in ROI Terms

Spreadsheets look free but consume hidden labor.

At small volumes, these costs are minor. But once you handle hundreds of thousands of items per month, the numbers quickly change.

Take a team labeling three hundred thousand items a month. At five hundred items per annotator per day and roughly twenty working days a month, you need thirty annotators. If a platform raises throughput to six hundred items per day, you need twenty-five. That is five fewer full-time annotators every month. Now consider rework. Cutting the rework rate from ten percent to three percent takes corrections from thirty thousand down to nine thousand, removing twenty-one thousand corrections a month. Add in the engineering hours saved by not merging CSVs or fixing schema drift, and the license fee becomes the smaller number.
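The staffing and rework arithmetic in this example can be checked with a few lines of Python. The throughput and rework figures come from the example; the twenty working days per month is our assumption, since it is the figure that turns three hundred thousand items at five hundred per day into thirty annotators. Engineering hours and the license fee are left out because no numbers are given for them.

```python
# Assumption: about 20 working days per month (implied by 300,000 items / 500 per day = 30 people).
WORKING_DAYS_PER_MONTH = 20


def annotators_needed(items_per_month, items_per_annotator_per_day,
                      working_days=WORKING_DAYS_PER_MONTH):
    capacity = items_per_annotator_per_day * working_days
    # Ceiling division: a partial annotator still means staffing a whole one.
    return -(-items_per_month // capacity)


def corrections_per_month(items_per_month, rework_percent):
    # Integer percentages keep the arithmetic exact.
    return items_per_month * rework_percent // 100


volume = 300_000
spreadsheet_team = annotators_needed(volume, 500)  # 30
platform_team = annotators_needed(volume, 600)     # 25
corrections_saved = corrections_per_month(volume, 10) - corrections_per_month(volume, 3)
print(spreadsheet_team - platform_team, corrections_saved)  # 5 21000
```

Plugging in your own volumes, rates, and working days is a quick way to see whether the savings outweigh a license fee for your team.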

Still, if you are running small or infrequent projects, the savings will not cover the cost of a platform. That is why spreadsheets continue to make sense in those cases.

Final Thoughts

Spreadsheets have their place. They are quick to set up and simple for small pilots or one-off jobs. But once projects grow, they start slowing teams down. Errors creep in, data gets messy, and more time goes into fixing work than moving forward.

A platform does not remove every challenge, but it adds structure and history that a sheet cannot. It keeps teams aligned when data and people multiply.

The decision is not all or nothing. Many teams still use spreadsheets for small side tasks while running their main pipelines on a platform. The key is knowing when a sheet is enough and when it becomes the bottleneck.