Generative AI is reshaping how enterprises operate—from powering self-driving cars with computer vision to improving customer support through language models. But no matter how advanced the models are, their success still depends on one foundation: high-quality training data and careful model evaluation.

It’s common (and makes good sense) for AI teams to rely on multiple annotation vendors. Different vendors often bring specialized expertise, language capabilities, or cost benefits. The issue isn’t using multiple vendors—it’s managing them separately. Fragmented setups can cause delays, inconsistent quality, disconnected workflows, and headaches for your team.

This article explains why managing all your data annotation vendors in one platform is becoming a strategic priority for AI leaders. By bringing all your vendors into a single environment, you can accelerate time-to-market, boost data quality, and significantly reduce operational costs and complexity. This is the first in our short blog series on tackling the challenges of multi-vendor annotation—starting with why consolidation is the foundation for doing it right.

The Multi-Vendor Dilemma

Engaging multiple vendors is often necessary for projects that require specific domain expertise, language-specific capabilities, or additional labor capacity. Yet it also creates significant operational overhead:

Siloed Setups Make AI Work Harder Than It Should

Each vendor typically uses its own annotation platforms, tools, and file formats. When it’s time to merge deliverables, project managers and engineering teams face the tedious task of reconciling schemas, taxonomies, and data structures. Often when different vendors are introduced to fill specific roles in the pipeline, their work happens in silos—making unification difficult. Without a shared “source of truth” for annotation standards, inconsistencies creep in, and AI teams are forced to spend extra time on quality checks. It’s no surprise that 45% of companies now cite inconsistent formats and definitions—often caused by juggling multiple vendors—as a major obstacle to scaling their AI efforts.

This patchwork of systems isn’t just inconvenient; it can introduce errors that limit the quality of the AI application and severely slow down the entire development cycle.
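To make the reconciliation problem concrete, here is a minimal sketch of mapping one vendor's labels onto a shared taxonomy. The vendor names, label names, and record layout are all hypothetical and chosen only for illustration; real deliverables will need richer mappings (attributes, geometry, nested classes).

```python
from collections import Counter

# Hypothetical shared taxonomy: the single "source of truth" for label names.
SHARED_TAXONOMY = {"car", "pedestrian", "traffic_sign"}

# Hypothetical per-vendor mappings from vendor label names to shared names.
VENDOR_LABEL_MAPS = {
    "vendor_a": {"Car": "car", "Person": "pedestrian", "Sign": "traffic_sign"},
    "vendor_b": {"vehicle": "car", "ped": "pedestrian", "traffic-sign": "traffic_sign"},
}


def normalize(vendor, records):
    """Map one vendor's labels onto the shared taxonomy.

    Returns (normalized_records, unmapped_label_counts) so that labels with
    no agreed mapping are surfaced for review instead of silently merged.
    """
    mapping = VENDOR_LABEL_MAPS[vendor]
    normalized = []
    unmapped = Counter()
    for rec in records:
        label = mapping.get(rec["label"])
        if label in SHARED_TAXONOMY:
            normalized.append({**rec, "label": label, "vendor": vendor})
        else:
            unmapped[rec["label"]] += 1
    return normalized, unmapped


# Illustrative deliverable from the hypothetical "vendor_b".
deliverable = [
    {"item_id": "img_001", "label": "vehicle"},
    {"item_id": "img_002", "label": "ped"},
    {"item_id": "img_003", "label": "bike"},
]
merged, unmapped = normalize("vendor_b", deliverable)
print(merged)
print(unmapped)
```

In this sketch, the `bike` label has no agreed mapping, so it is counted in `unmapped` rather than merged. Every vendor added to a fragmented setup means another mapping like this to write and maintain.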

Lack of Standardization Means Poor Quality Control

With multiple vendors, there’s often no shared QA framework and no consistent set of performance metrics. One provider might use a multi-step quality check while another takes a single-touch approach. Without standardized workflows, it’s hard to judge who’s actually delivering high-quality outputs, or to enforce uniform quality across the board.
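One way to picture a shared performance metric is to score every vendor against the same gold-labeled items. The sketch below is an illustration under stated assumptions, not a description of any particular platform: the function name, vendor names, and data layout are hypothetical, and plain accuracy stands in for whatever metric a team actually standardizes on.

```python
def accuracy_by_vendor(gold, submissions):
    """Score each vendor against the same gold-labeled items.

    gold: dict mapping item_id -> correct label
    submissions: iterable of (vendor, item_id, label) tuples
    Returns a dict mapping vendor -> fraction of gold items labeled correctly.
    """
    correct = {}
    total = {}
    for vendor, item_id, label in submissions:
        if item_id not in gold:
            continue  # only gold items count toward the score
        total[vendor] = total.get(vendor, 0) + 1
        if label == gold[item_id]:
            correct[vendor] = correct.get(vendor, 0) + 1
    return {v: correct.get(v, 0) / total[v] for v in total}


# Hypothetical gold set and vendor submissions.
gold = {"a": "car", "b": "pedestrian", "c": "car", "d": "traffic_sign"}
submissions = [
    ("vendor_a", "a", "car"),
    ("vendor_a", "b", "pedestrian"),
    ("vendor_a", "c", "car"),
    ("vendor_a", "d", "car"),
    ("vendor_b", "a", "car"),
    ("vendor_b", "b", "car"),
]
scores = accuracy_by_vendor(gold, submissions)
print(scores)
```

Because both vendors are measured on the same items with the same rule, the resulting numbers are directly comparable, which is the property a fragmented QA setup lacks.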

No Real-Time Visibility = Missed Issues

The “black box” effect is real. Each vendor may have its own reporting tools and timelines, so you might not detect quality issues until final delivery, when fixing them means delays and costly rework. Worse, there’s no clear visibility into real-time progress, making it harder to monitor throughput, reassign tasks, or course-correct when things fall behind. Project managers waste valuable time chasing updates instead of focusing on higher-impact strategic work.

Note on QA: Low-quality data can easily slip through the cracks in a multi-vendor setup if there isn’t a centralized way to enforce standards and catch errors early. Catching issues too late often means reworking entire datasets—delaying model launches and inflating costs.

Admin Sprawl Drains Strategic Resources

Every additional vendor introduces another cycle of contract negotiation, security reviews, and onboarding for each tool. Procurement, legal, and IT teams get bogged down with repetitive processes for each vendor. Over time, these cycles drain resources and delay project start times. Even coordinating project setup and access permissions across different platforms consumes bandwidth that could be better spent on actual AI development.

This fragmentation isn’t just a technical annoyance; it has real business consequences. When teams can’t quickly identify bottlenecks or reassign tasks to available resources, operational costs rise and time-to-market stretches out, which is especially problematic if your competitors are moving faster in the GenAI space.

Run Every Annotation Vendor on One Platform

High-performing AI teams have stopped hopping between five different labeling portals and spreadsheets. Instead, they use an enterprise workspace—think SuperAnnotate—where every external and internal labeling team plugs into the same toolset, the same taxonomy, and the same reporting layer. Centralizing your annotation supply chain this way turns a patchwork of hand-offs into one coordinated pipeline.

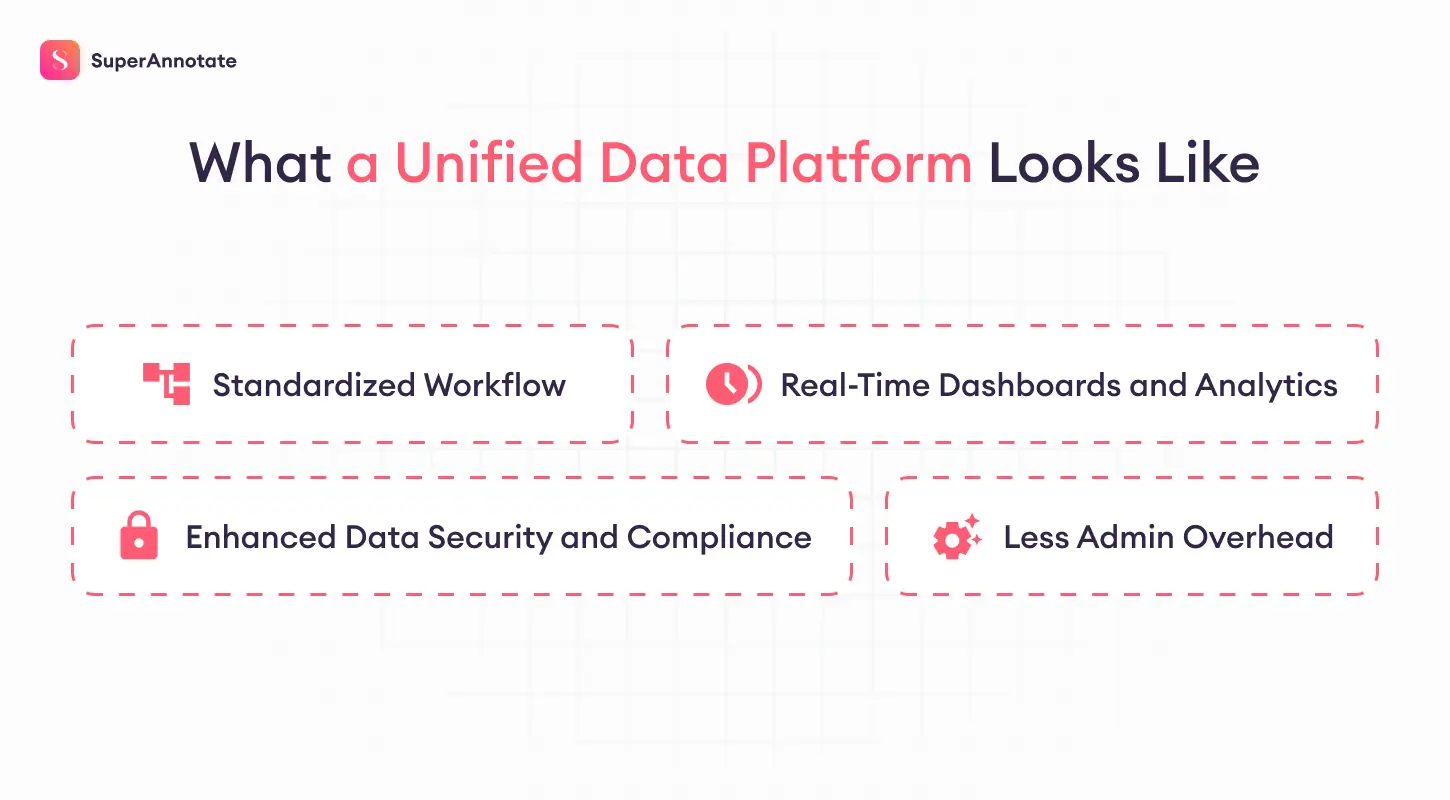

Consolidation doesn’t lock you into a single vendor; it simply gives every annotation vendor, internal labeling team, reviewer, and stakeholder one shared toolkit for their work. That shared toolkit enables:

- Standardized Workflows

When everyone uses the same interface and follows the same protocols, quality and consistency improve dramatically. The platform helps enforce uniform taxonomies, QA checklists, and labeling guidelines across all projects, ensuring every vendor adheres to the same high standards.

- Real-Time Dashboards and Analytics

A unified “command center” offers immediate insight into the progress and performance of each vendor. This allows project leads to identify lagging teams, re-allocate tasks, or intervene before minor issues become major setbacks.

- Reduced Administrative Burden

Running annotation RFPs on a single platform centralizes much of the setup process. Instead of spinning up multiple environments and negotiating unique workflows for each vendor, you can simply create a project, invite approved vendors, and monitor their output in real time. This simplifies both new RFP processes and day-to-day oversight, freeing up procurement and AI teams to focus on more strategic activities.

- Enhanced Data Security and Compliance

Maintaining compliance with data privacy regulations (e.g., GDPR, HIPAA) can be challenging when you’re juggling multiple vendor relationships. A single platform with built-in security features helps enforce consistent compliance across all vendors, reducing risk and safeguarding sensitive data.

Looking Ahead

Relying on multiple vendors is a smart move in the GenAI race, but managing them across disconnected environments often slows you down and drains resources you didn’t plan for. The enterprises that stay ahead aren’t necessarily using fewer vendors; they’re managing them through a single, unified platform that keeps everything aligned and efficient.

Consolidating annotation vendors isn’t just about cutting costs—it’s about gaining control. With a unified platform, clearer accountability, and real-time insights, enterprise AI teams can move faster, deliver better models, and reduce operational friction.

In upcoming articles, we’ll break down other pain points caused by vendor sprawl—starting with the inefficiencies of repeated RFP cycles. If you’d like a preview of how unified annotation management might work for your team, feel free to reach out for a demo.