Large language models (LLMs) are the talk of the tech town, and for good reason. Models like ChatGPT have swept through many industries in a short amount of time, leaving people impatient for what’s yet to come. In fact, 78% of enterprises plan to adopt large language models and generative AI as part of their AI transformation initiatives this fiscal year. LLMs can craft texts that sometimes surpass human imagination, attracting industries from retail, e-commerce, marketing, and advertising to healthcare, finance, and almost any other field you can think of.

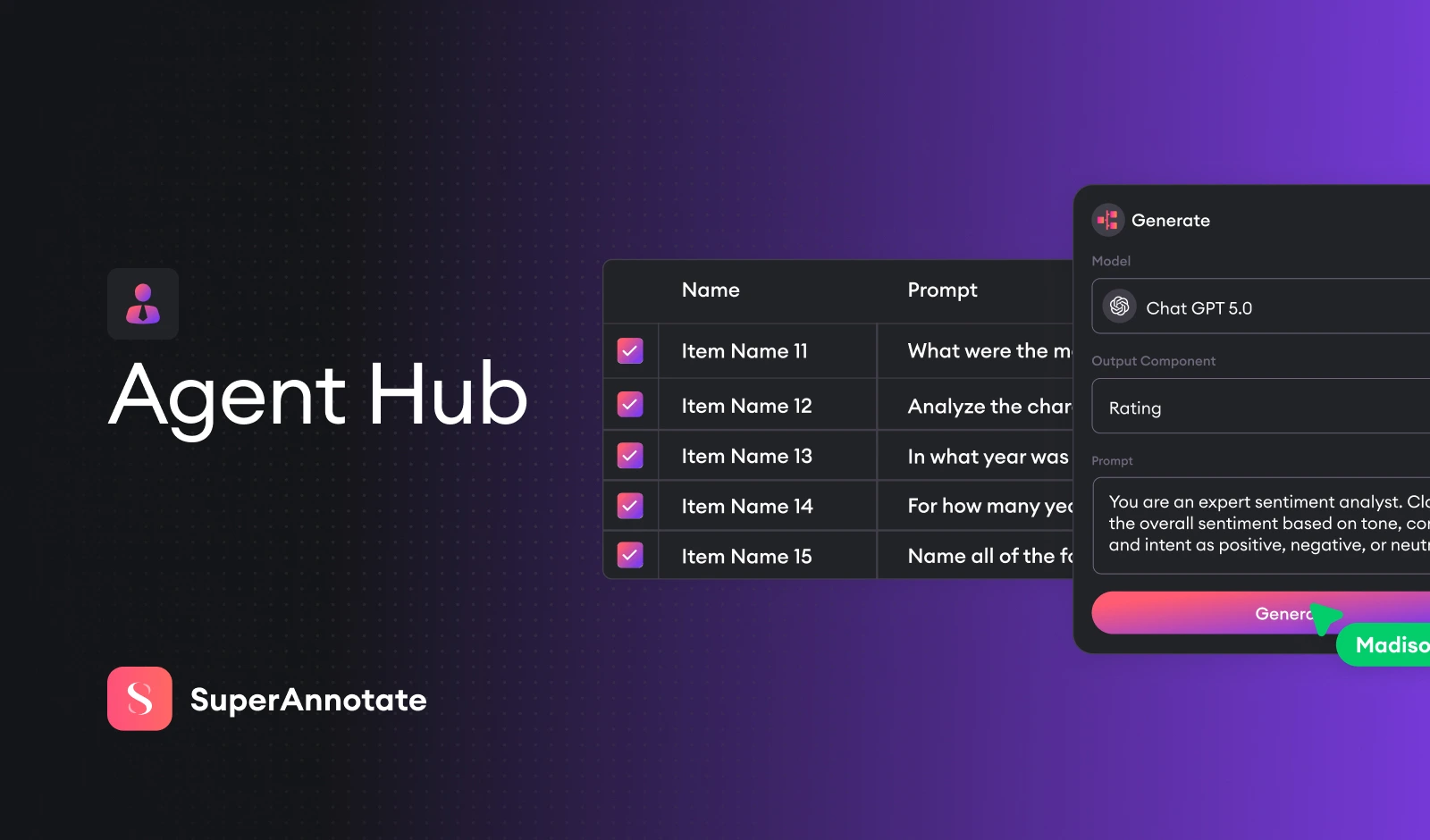

SuperAnnotate could not miss out on this, so we decided to build the most flexible LLM toolbox to cover a wide range of business use cases. In this article, we’ll demonstrate SuperAnnotate’s LLM custom editor, its incredible customizability, its uniqueness, and the use cases it covers.

LLMs in SuperAnnotate

There are many exciting things to cover about SuperAnnotate’s LLM editor. Its flexible design allows it to cover an extensive range of use cases across various industries, and we’re about to learn what’s so special about the tool.

Why choose SuperAnnotate’s LLM toolbox?

SuperAnnotate’s toolbox has a lot to offer, no matter your use case or industry.

1. Experiment, experiment, experiment: In the realm of large language models, experimentation is essential. A good LLM toolbox has to let you try things out quickly: changing, removing, adding, and reviewing. Shaping the data into the form you want may take several iterations, and each one needs to be quick and seamless. A lack of adaptability not only slows down development and inflates operational costs, but also stifles creativity, because a cumbersome change process gets in the way of building innovative solutions and achieving top-notch data. That is why SuperAnnotate’s custom LLM toolbox revolves around rapid iteration: testing, refining, and re-testing in quick succession.

2. Data curation: Data curation is a top priority for our tool. Language models like GPT are known for producing inaccurate and biased output with high confidence. This is an issue for any industry, and especially for sensitive ones such as security, finance, and healthcare. For this reason, our LLM tool puts a strong emphasis on data curation: we make sure to remove the trash and bias from the client’s data, filter the data to keep what’s important, and train the large language model only on the relevant parts.

3. Data governance and security: Large language models, in general, are hungry for data, and public models can “eat” your data once it’s put in the prompt and use it for training. Many other custom LLM tools store clients’ data on their own servers for further development, which essentially means your data is in other people’s hands. Data governance and security are significant factors that enterprises weigh when choosing an LLM annotation platform. That is why data governance is at the center of everything SuperAnnotate does. We keep everything, from initial data to annotated data, only in the client’s database, so your data doesn't leave the organization's infrastructure and the risk of exposure is reduced.

4. Customizability: This is one of our greatest features. SuperAnnotate’s LLM editor has a highly customizable interface and seamless API integration, which goes hand in hand with the experimentation we just talked about. SuperAnnotate’s toolbox leaves room for creativity and the freedom to create what serves your business needs best and to design the LLM of your dreams.

5. Trained on domain-specific datasets: Out of the box, general-purpose language models often struggle with enterprise-specific tasks. SuperAnnotate’s tool allows you to train the model with your own data according to your specific requirements. This gives the model proper domain knowledge and, in turn, higher accuracy in niche areas than general models like GPT.

SuperAnnotate’s LLM use cases

Let’s now get to the heart of the matter: what use cases does SuperAnnotate’s LLM tool cover? This article covers the simplest yet most fundamental use cases you can implement with our tool, but keep in mind that you’re free to build a lot more than that. Our tool is flexible by design, allowing you to build the best LLM for your specific use case. Let’s see how.

LLM playground

SuperAnnotate introduced a new LLM playground where you can try ready-made templates for your LLM and GenAI use cases, such as chat rating, RLHF for image generation, model comparison, and GPT fine-tuning. You can even create a custom template for your specific use case. Just click on the templates to try them. Keep on reading to learn more about each use case and how to use the corresponding template in the playground.

Use case 1: Chat rating

Chat rating is getting more popular for a few reasons. When using ChatGPT or other language models, we notice many mistakes, biases, and other elements that affect the quality of the response. Now imagine being able to rate the chat you’re having with the model at a large scale and use those ratings to improve performance at the training level. Why is this so useful? Reviewing and rating the model's responses allows you to fine-tune the model to generate content that aligns better with your preferences and requirements. This can be especially valuable if you are using AI-generated content for specific purposes such as content creation, customer support, or research. Having a chat annotation system helps the chat produce steadily better responses and helps you write more advanced prompts over time.

For chat rating, as well as for other use cases, you can either start building from scratch or just use the template on our website. Here’s the chat rating template.

This is what it looks like in action. First, you build your custom use case. The great thing here is that you can import the chat form directly into the tool from a JSON file, as shown in the video. Next, you name the labels, such as “Rate the prompt” and “Rate the answer,” and click “Publish.” At this point, you specify how many items to generate from your custom form so they can be annotated, name them, and click “Publish and generate.”

Then, you need to annotate the chat. Say you select the GPT-4 model, and your prompt is "What might be the biggest technological innovations by 2030?" After clicking “Submit,” you can rate both the prompt and the output.
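To picture how such ratings could feed back into fine-tuning, here is a minimal Python sketch. It assumes each annotated chat has been exported as a dictionary with the hypothetical fields `prompt`, `answer`, `prompt_rating`, and `answer_rating`. These names, the sample values, and the threshold are illustrative assumptions, not SuperAnnotate’s actual export schema. The sketch keeps only highly rated answers as candidate training examples.

```python
import json

# Hypothetical export of annotated chats. Field names and values are
# made up for illustration; they are not SuperAnnotate's export schema.
rated_chats = [
    {
        "prompt": "What might be the biggest technological innovations by 2030?",
        "answer": "A detailed, well-structured answer.",
        "prompt_rating": 5,
        "answer_rating": 5,
    },
    {
        "prompt": "Tell me stuff about tech.",
        "answer": "A vague answer.",
        "prompt_rating": 2,
        "answer_rating": 2,
    },
]


def select_for_finetuning(chats, min_answer_rating=4):
    """Keep prompt/answer pairs whose answer rating meets the threshold."""
    return [
        {"prompt": chat["prompt"], "completion": chat["answer"]}
        for chat in chats
        if chat["answer_rating"] >= min_answer_rating
    ]


def to_jsonl(examples):
    """Serialize examples as JSON Lines, one example per line."""
    return "\n".join(json.dumps(example) for example in examples)


selected = select_for_finetuning(rated_chats)
jsonl_text = to_jsonl(selected)
```

The threshold is a design choice: a stricter cutoff gives a smaller but cleaner dataset, while a looser one keeps more examples at the cost of quality.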

Use case 2: RLHF for image generation

Reinforcement learning from human feedback (RLHF) is another hot topic today that we integrated into our tool.

Let’s say you’re a marketing agency building advertisement campaigns and thinking of ways to speed up your production. One way to do that is by hiring a creative team that would generate and brainstorm ideas and build the designs. Or you can spend a few seconds in our LLM tool and seamlessly get top-notch results.

The RLHF for image generation template:

The first step is building the UI. Here’s the amazing thing about the tool—just look at the set of builders you can use to customize your form. For this RLHF case, we’ll build it this way.

Input => button => select => the desired number of images => ranking annotation => text annotation

Next, we need to rename the components according to our use case.
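The form layout and the renaming step can be pictured as plain data. The Python sketch below is only an illustration: the `Component` class, the `build_rlhf_form` and `rename` helpers, and all labels are hypothetical names invented here, not the editor’s real schema or API. It shows the component order described above and how default labels might be swapped for use-case-specific ones.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Component:
    """One form element (hypothetical structure, for illustration only)."""
    kind: str
    label: str


def build_rlhf_form(num_images):
    """Input, button, select, N images, ranking annotation, text annotation."""
    form = [
        Component("input", "Input"),
        Component("button", "Button"),
        Component("select", "Select"),
    ]
    form += [Component("image", f"Image {i}") for i in range(1, num_images + 1)]
    form += [Component("ranking", "Ranking"), Component("text", "Text")]
    return form


def rename(form, new_labels):
    """Return a copy of the form with labels replaced where a new one is given."""
    return [replace(c, label=new_labels.get(c.label, c.label)) for c in form]


form = build_rlhf_form(num_images=3)
renamed = rename(
    form,
    {
        "Input": "Prompt",
        "Button": "Generate",
        "Ranking": "Rank the images",
        "Text": "Why is the top image the best?",
    },
)
```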

And here’s the template in practice. Let’s say you’re creating advertisement posters for Lego. You think that the Lego construction of the Great Wall of China would be an amazing idea for a poster. But you don’t want to be limited to just a few options. For this kind of project, you want to rank your model’s outputs and have a textual annotation on why the highest-ranked output is the best. In the Lego case, we find the third option to be the best because it captures the mesmerizing building in its full essence.
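A ranking like this is often turned into pairwise comparisons before it is used to train a reward model. The Python sketch below shows that common conversion under stated assumptions: the `PreferencePair` class and `ranking_to_pairs` function are hypothetical names, the order of the two lower-ranked options is invented for the example, and nothing here is confirmed as the way SuperAnnotate processes rankings internally.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class PreferencePair:
    """One human preference: `chosen` was ranked above `rejected`."""
    prompt: str
    chosen: str
    rejected: str


def ranking_to_pairs(prompt, ranked_outputs):
    """Expand a best-first ranking into all (better, worse) pairs.

    combinations() preserves input order, so the first element of each
    pair is always the higher-ranked output.
    """
    return [
        PreferencePair(prompt, better, worse)
        for better, worse in combinations(ranked_outputs, 2)
    ]


# Illustrative data: option 3 is ranked best, as in the Lego example.
# The relative order of options 1 and 2 is an assumption.
pairs = ranking_to_pairs(
    "Lego construction of the Great Wall of China, advertisement poster",
    ["option_3.png", "option_1.png", "option_2.png"],
)
```

A ranking of n outputs yields n(n-1)/2 pairs, so three ranked images produce three comparisons. The free-text explanation of why the top output won can be stored alongside the pairs as extra context for reviewers.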

The great thing about this use case is the wide range of opportunities it offers. It can be scaled up to a large marketing campaign that produces creative, artistic images for any purpose, with the annotation and ranking features helping you pick the best deliverable.

Use case 3: Model comparison

Suppose you want to receive the best result possible for your queries and want to test a few of the existing models like ChatGPT, Cohere, Bard, etc., and choose the one that fits best for you.

The model comparison template:

If you’d rather not use the ready-made template, you can build one yourself. The input box is for the prompt. After clicking the button, you’ll receive outputs from different models. Again, the number of models that generate output and the choice of models are up to you.

Once you rename the components and choose the models that you want (in our case, Cohere and ChatGPT), it’s time to publish the template. You need to specify the number of items, name them, and publish them.

Let’s observe the outputs of the two models. Cohere gives straightforward, short answers, while ChatGPT gives more detailed and comprehensive answers. Annotators will rate the responses according to the purpose of the use case. We can use two types of ratings here: a star rating and a comprehensiveness rating.
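Once several prompts have been rated, the two scores can be averaged per model to support the final choice. The Python sketch below is a minimal illustration: the record fields (`model`, `stars`, `comprehensiveness`), the `summarize_ratings` function, and every number in the sample data are made up for the example and do not come from real annotations or from SuperAnnotate’s export format.

```python
from collections import defaultdict
from statistics import mean

# Made-up annotation records, for illustration only.
ratings = [
    {"model": "Cohere", "stars": 4, "comprehensiveness": 2},
    {"model": "Cohere", "stars": 3, "comprehensiveness": 3},
    {"model": "ChatGPT", "stars": 4, "comprehensiveness": 5},
    {"model": "ChatGPT", "stars": 5, "comprehensiveness": 4},
]


def summarize_ratings(records):
    """Average the star and comprehensiveness ratings for each model."""
    by_model = defaultdict(lambda: {"stars": [], "comprehensiveness": []})
    for record in records:
        scores = by_model[record["model"]]
        scores["stars"].append(record["stars"])
        scores["comprehensiveness"].append(record["comprehensiveness"])
    return {
        model: {metric: mean(values) for metric, values in scores.items()}
        for model, scores in by_model.items()
    }


summary = summarize_ratings(ratings)
# Pick the model with the highest average for the metric that matters most.
best_by_stars = max(summary, key=lambda model: summary[model]["stars"])
```

Which metric decides the winner depends on the use case: a support bot may favor short, direct answers, while a research assistant may favor comprehensiveness.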

Here’s how the GPT fine-tuning template works.

Build your own use case

We said it a few times already, but it’s worth mentioning again: SuperAnnotate’s LLM editor is highly customizable. This means that apart from the use cases we talked about, you’re free to build your own.

With the “Build your template” option, you can customize your use case, from building your model from the ground up to curating the perfect data for fine-tuning. This tool changes how you work with generative language models, empowering you to create, adapt, and optimize according to your unique needs and aspirations.

How does the tool work under the hood?

In this GitHub repository, you can find the code that powers the use cases described above. The readme files give a step-by-step explanation of the setup process for the three use cases.

A big note here! With the SuperAnnotate LLM toolbox, LLM engineers can define UI behavior right in the browser using Python, a departure from the traditional JavaScript methods. This shift leverages the prevalent Python expertise in the LLM community, making the development process smoother and more intuitive.

Conclusion

What we presented in this article is just a small portion of what’s possible to build with SuperAnnotate’s LLM editor. We offer this tool as software for businesses that are planning to build their large language models—providing them with the best quality training data with top-notch data curation as well as data governance and security. The tool’s high customizability gives clients the freedom to build an LLM that meets their business requirements. LLMs have gained hype in recent months in almost every industry, and if you want to add LLM value to your product, request a demo and enjoy the journey.