Flo Health is the leading women’s health platform, trusted by over 280M users worldwide. Its mission: deliver safe, accurate, and personalized health insights. To advance that mission, Flo built AskFlo, an AI-powered assistant designed to provide medically sound answers to sensitive health questions.

The Challenge: Operationalizing Clinical Expertise for LLM Safety

Everyone is building with AI. But in safety-critical domains like women’s health, off-the-shelf models fall short. General-purpose LLMs can’t reliably capture the clinical expertise required to avoid harmful errors.

Flo's team needed to leverage medical experts to:

- Validate 12,000+ LLM outputs against clinical guidelines.

- Meet a >90% accuracy threshold for safety and trust.

- Reduce iteration cycles from weeks to days to stay on track for launch.

Fragmented manual tools made clinical expertise hard to capture and impossible to scale. The bottleneck wasn’t the model; it was sourcing the knowledge of a large team of medical experts and operationalizing it in a repeatable, production-grade evaluation pipeline.

The Solution: SuperAnnotate as the Expert-in-the-Loop Platform

Flo turned to SuperAnnotate, the data platform for domain AI, to put its medical experts directly in the loop of model evaluation.

By integrating SuperAnnotate + Databricks, Flo built a robust, expert-powered evaluation pipeline that:

- Maximized expert value by translating clinical judgment into structured rubrics and workflows.

- Ensured consistency through precise assignment of data to experts and honeypot QA checks.

- Cut iteration cycles from 2–3 weeks down to 3–5 days.

- Seamlessly fed evaluation results into Databricks’ Mosaic AI & MLflow pipelines, powering continuous model refinement.
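To make the honeypot QA idea concrete, here is a minimal sketch of how such a check could work, not a description of Flo's or SuperAnnotate's actual implementation. It assumes a handful of items with known gold labels are mixed into each reviewer's queue, and that a reviewer's agreement with those labels is used as a consistency signal. The function names, the label values, the sample data, and the 0.9 reviewer threshold are all hypothetical.

```python
from collections import defaultdict


def honeypot_accuracy(reviews, gold_labels):
    """Return each reviewer's accuracy on honeypot items only.

    reviews: iterable of (reviewer, item_id, label) tuples (hypothetical schema).
    gold_labels: dict mapping honeypot item_id to its known correct label.
    """
    correct = defaultdict(int)
    seen = defaultdict(int)
    for reviewer, item_id, label in reviews:
        if item_id not in gold_labels:
            continue  # ordinary item, not a honeypot, so it is not scored here
        seen[reviewer] += 1
        if label == gold_labels[item_id]:
            correct[reviewer] += 1
    return {reviewer: correct[reviewer] / seen[reviewer] for reviewer in seen}


def flag_reviewers(accuracy_by_reviewer, threshold=0.9):
    """List reviewers whose honeypot accuracy falls below the assumed threshold."""
    return sorted(r for r, acc in accuracy_by_reviewer.items() if acc < threshold)


# Illustrative data only: two honeypots and one ordinary item.
gold_labels = {"hp-1": "safe", "hp-2": "unsafe"}
reviews = [
    ("expert_a", "hp-1", "safe"),
    ("expert_a", "hp-2", "unsafe"),
    ("expert_a", "q-17", "safe"),
    ("expert_b", "hp-1", "safe"),
    ("expert_b", "hp-2", "safe"),
]

scores = honeypot_accuracy(reviews, gold_labels)
flagged = flag_reviewers(scores)
```

In a setup like this, a flagged reviewer's recent annotations would typically be routed for a second review rather than discarded outright; how Flo handled such cases is not stated in this case study.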

Results: From Bottlenecks to Breakthroughs

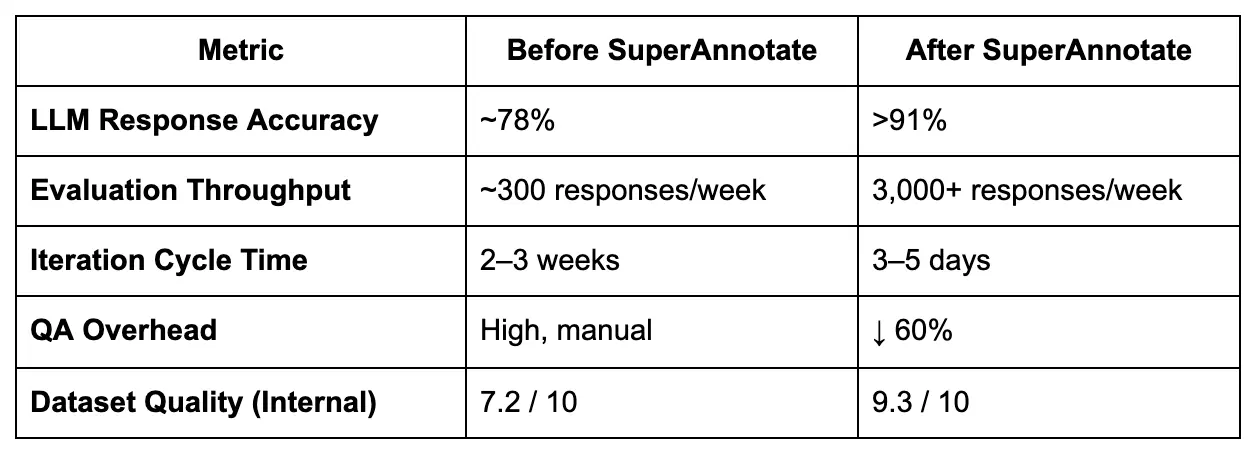

Within weeks, Flo’s evaluation pipeline went from manual bottlenecks to production-grade scalability:

These improvements unlocked a 10x throughput boost, accelerated time-to-market, and gave Flo’s medical team the confidence that AskFlo met clinical safety standards.

Why Flo Chose SuperAnnotate

Flo evaluated other platforms but chose SuperAnnotate for its ability to:

- Turn expertise into production-ready AI with customized workflows designed around Flo’s clinical protocols.

- Automate the grunt work, not the expertise, freeing reviewers to focus on edge cases.

- Scale from pilot to data factory, enabling both small test runs and enterprise-grade evaluation pipelines.

Looking Ahead

With AskFlo now deploying globally, Flo plans to:

- Expand into multilingual evaluations.

- Apply RLHF (reinforcement learning from human feedback) for deeper model alignment.

- Further automate safety QA loops with SuperAnnotate.

Final Takeaway

In healthcare, accuracy isn’t optional. With SuperAnnotate, Flo Health built an expert-powered evaluation engine that combined clinical safety with production-scale AI development.

The result:

- 91%+ accuracy validated at scale.

- 10x faster evaluations.

- Rapid iteration cycles that bridged clinical, data, and engineering teams.

Flo’s success proves that in high-stakes AI, the winning formula isn’t a bigger model—it’s operationalizing domain expertise with SuperAnnotate.

“SuperAnnotate enabled us to transform deep medical expertise into scalable, structured ground truth data within our Databricks pipeline, driving 10x evaluation throughput, rapid 5-day iteration cycles, and a new level of alignment between clinical, data, and engineering teams.”

— Roman Bugaev, CTO, Flo Health